Hello,

After avoiding shaders for ages, I finally took the step to look into them. I specifically went through this tutorial series:

http://cgcookie.com/unity/cgc-courses/noob-to-pro-shader-writing-for-unity-4-beginner/

After that I fiddled around and learned some more about manipulating vertices with heightmaps and the like. It's pretty fun.

I've looked around on the interwebz, but I cannot find answers to some of my (seemingly) simple questions.

To add a little more context to my questions: I'm using Unity3D, where a shader is attached to a material and the material is attached to the mesh.

1. Scene-wide shaders

This might be specific to Unity, but if I understand correctly, it is not possible to have one shader affect all the objects in your scene?

I'm wondering how effects that apply to multiple objects are handled.

Take this Animal Crossing shader for example: it displaces all the objects on the Y axis based on their distance to the camera. I actually managed to create a similar effect, but had to apply the shader to each of my scene's objects separately, which seems annoying.

Additionally, I have encountered shader effects that span multiple objects, which makes it even more confusing.

Can anyone enlighten me on how this is done?

2. Effects surpassing mesh/texture bounds

I don't fully understand how some shaders apply effects that extend past their texture's bounds. If I apply a blur or a glow to a texture, how can the effect reach beyond the object's bounds?

I understand how a fullscreen glow effect just blurs the screen buffer on a texture.

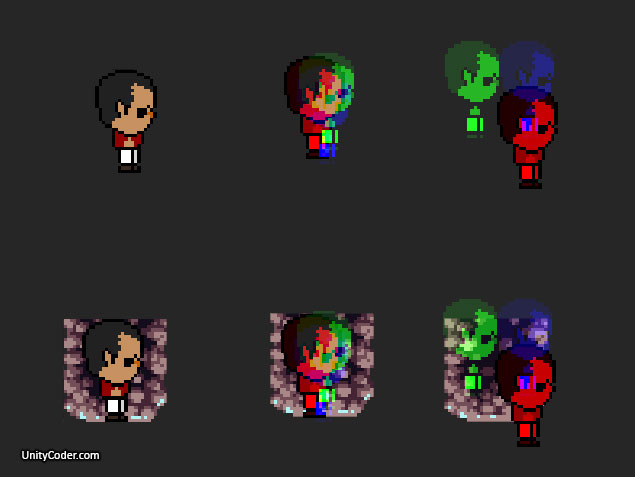

http://unitycoder.com/blog/2014/01/06/sprite-rgb-split-shader-test/

This is a decent example; the effect expands a lot beyond the initial texture.

When I look at the source code, my still limited shader knowledge makes me guess that he is expanding the vertices to make room, but I am not completely sure.

3. Shaders on shared materials

How come I can apply 2 different colors to 2 different objects that use the same material? I would expect them both to have the same color, since the shader is attached to that specific material.

4. Vertex shader neighbours

If I would like to apply a shader that only affects the top vertices of a mesh, how would I handle that? Since the vertex shader runs on a per-vertex basis, you don't have any information about the mesh's other vertices, right? I'm guessing you could extract the information from normals or mark certain vertices with vertex colors, but I was just wondering whether my assumptions are correct.
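
To make the vertex color guess concrete, this is roughly what I have in mind. It is only a minimal sketch, not something I know to be the standard approach: it assumes the mesh was painted in the modelling tool so that the red channel is 1 on the vertices that should move and 0 everywhere else. The shader name "Custom/TopVertexWave" and the bobbing motion are made up for illustration.

```shaderlab
Shader "Custom/TopVertexWave" {
    Properties {
        _MainTex ("Base (RGB)", 2D) = "white" {}
        _Amplitude ("Amplitude", Float) = 1
    }
    SubShader {
        Tags { "RenderType"="Opaque" }
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct appdata_t {
                float4 vertex : POSITION;
                fixed4 color : COLOR;        // per-vertex color stored in the mesh
                float2 texcoord : TEXCOORD0;
            };

            struct v2f {
                float4 vertex : SV_POSITION;
                half2 texcoord : TEXCOORD0;
            };

            uniform sampler2D _MainTex;
            uniform float4 _MainTex_ST;
            uniform float _Amplitude;

            v2f vert (appdata_t v)
            {
                v2f o;
                // Assumption: red = 1 marks a vertex that should move, red = 0 leaves it alone.
                float mask = v.color.r;
                // _Time.y is the elapsed time in seconds; unmarked vertices get an offset of 0.
                v.vertex.y += sin(_Time.y) * _Amplitude * mask;
                o.vertex = mul(UNITY_MATRIX_MVP, v.vertex);
                o.texcoord = TRANSFORM_TEX(v.texcoord, _MainTex);
                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                return tex2D(_MainTex, i.texcoord);
            }
            ENDCG
        }
    }
}
```

With this, the vertex shader still knows nothing about its neighbours; the "which vertices are on top" information is baked into the mesh data instead.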

5. Vertex world position

This one is shamefully specific. I made a shader which takes a mesh and applies a "wave" effect to it. I had to convert the vertices from object space to world space, but I don't have a clue what to do with them before I pass them on to the fragment shader.

    // Unlit shader. Simplest possible textured shader.
    // - no lighting
    // - no lightmap support
    // - no per-material color

    Shader "Custom/Wave" {
        Properties {
            _MainTex ("Base (RGB)", 2D) = "white" {}
            _Color( "Color", Color) = (1.0, 1.0, 1.0, 1.0)
            _Frequency( "WaveFrequency", float) = 10
            _Amplitude( "Amplitude", float) = 1
            _Speed( "Speed", float ) = 50
        }

        SubShader {
            Tags { "RenderType"="Opaque" }
            LOD 100

            Pass {
                CGPROGRAM
                #pragma vertex vert
                #pragma fragment frag

                #include "UnityCG.cginc"

                struct appdata_t {
                    float4 vertex : POSITION;
                    float2 texcoord : TEXCOORD0;
                };

                struct v2f {
                    float4 vertex : SV_POSITION;
                    half2 texcoord : TEXCOORD0;
                };

                uniform sampler2D _MainTex;
                uniform float4 _MainTex_ST;
                uniform float4 _Color;
                uniform float _Frequency;
                uniform float _Amplitude;
                uniform float _Speed;

                v2f vert (appdata_t v)
                {
                    v2f o;

                    //wave
                    float4 worldV = mul( _Object2World, v.vertex );
                    float speed = _Time * _Speed;
                    float frequency = (worldV.x + worldV.y ) / _Frequency ;
                    float amplitude = _Amplitude;
                    worldV.z += sin( frequency + speed ) * amplitude;
                    worldV = mul( _World2Object, worldV );

                    o.vertex = mul(UNITY_MATRIX_MVP, worldV);
                    o.texcoord = TRANSFORM_TEX(v.texcoord, _MainTex);
                    return o;
                }

                fixed4 frag (v2f i) : SV_Target
                {
                    fixed4 col = tex2D(_MainTex, i.texcoord);
                    col *= _Color;
                    return col;
                }
                ENDCG
            }
        }
    }

Applying the shader as it currently is scales the mesh by a large amount.

These questions might seem awfully basic, but I just cannot find any resources on them.

Thanks in advance.
