szczm_

Guest

« on: April 08, 2019, 09:06:51 AM »

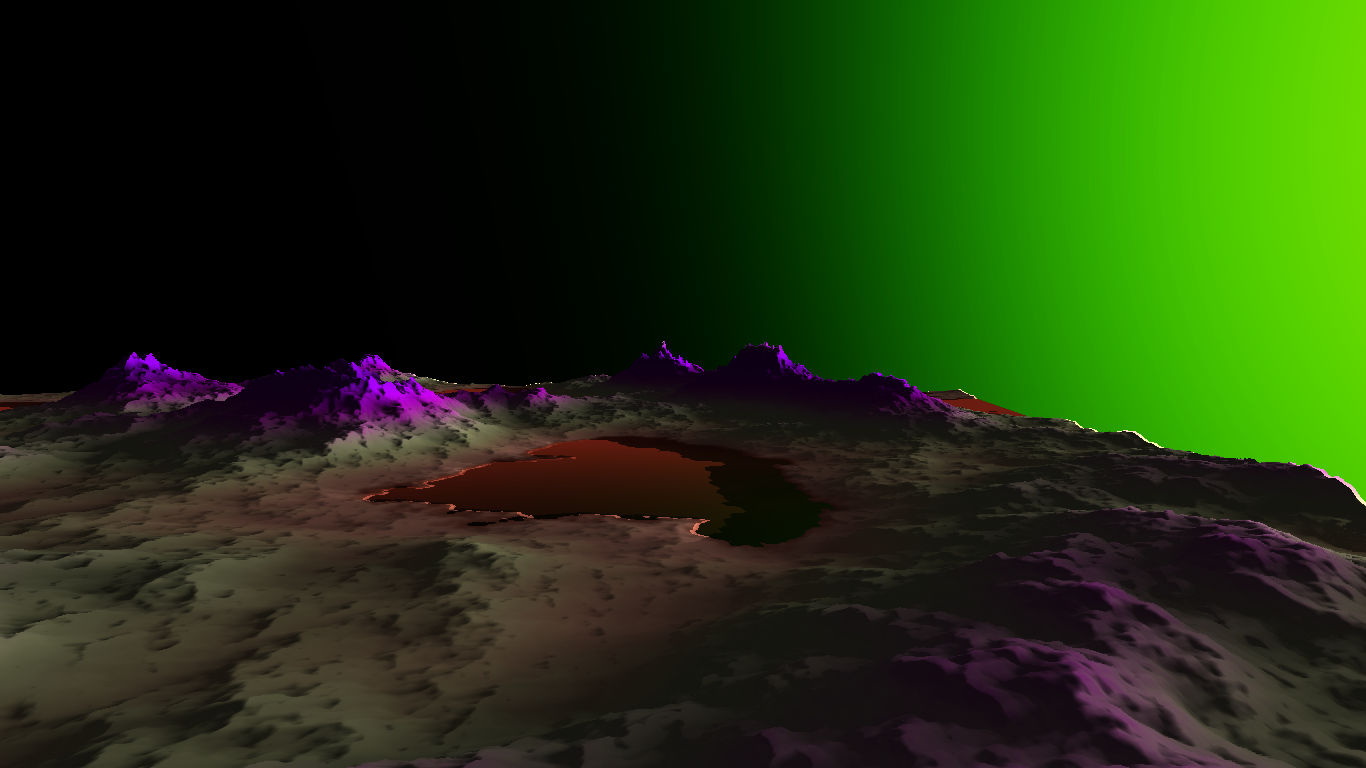

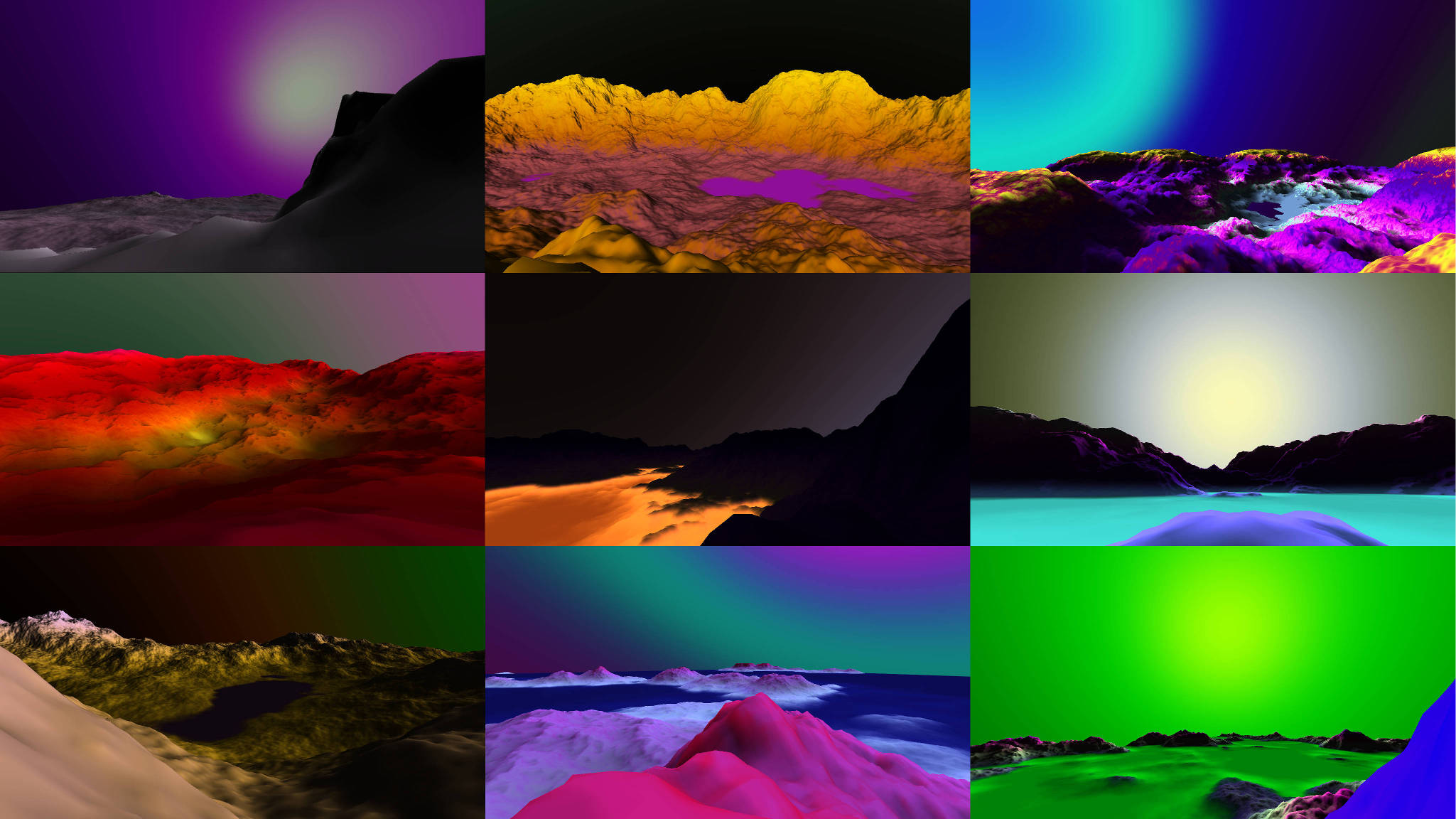

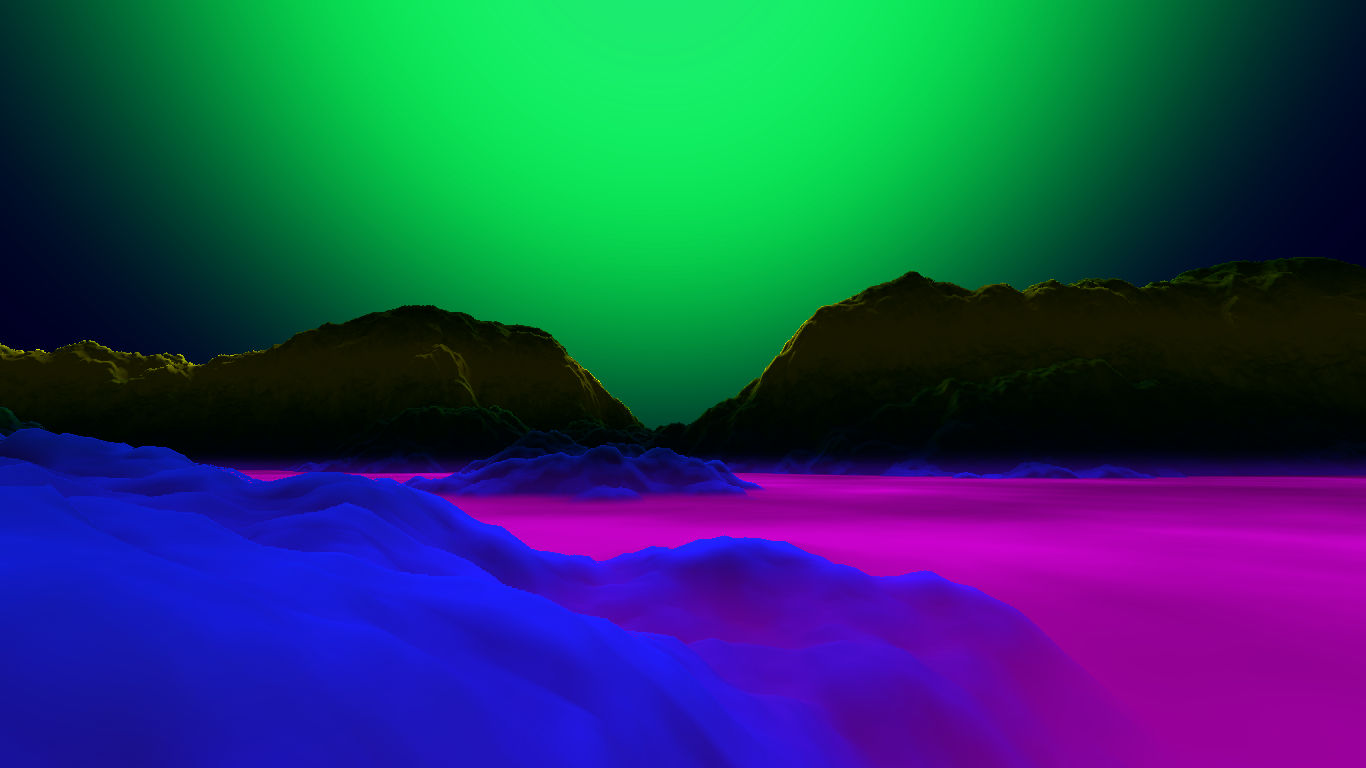

Hi! Since I don't think this fits into any other board, and I've been told on the Discord that this might be the place to go, then here it is. If this is not the right board, let me know. To say it shortly, in 2018 (June maybe?) I've been developing a procedural landscape generator that renders the world sphere tracing (a variant of ray marching). It's been a little side project I've been developing for about a week, to test my procgen skills and boost my motivation. Everything you see is procedural, generated in Lua (LÖVE) first, and then rendered in the shader (GLSL). I've recently shared some screenshots on a subreddit, and I've been asked for source code, so I've posted it on my repository. Here are some screenshots:    Since I described everything in detail in this repository, and I don't want to copy everything here, here's the repository: https://github.com/szczm/raymarching-landscape-explorerHere are the technical details of how it works: https://github.com/szczm/raymarching-landscape-explorer/blob/master/TECHNICAL.mdYou only need a (maybe) good GPU to render it, as it's a really needy algorithm. It's something that I'm a bit proud of, and I just wanted to share it with you all — maybe it inspires someone, maybe you'll do something amazing with it (although the code's a bit ugly). If you liked it then let me know, if you didn't — let me know, if you have any questions — you know what to do, and if you want to follow my future doings, games, experiments, whatever I do — I try to post regularly on Twitter, so you can follow me there, at @szczm_  I also have more screenshots there if anyone's interested. Thank you for your attention, and have a wonderful day! |
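
For readers new to the technique, a minimal sketch of a sphere tracing loop might look like the following. It is written in Python for readability rather than GLSL, it is not taken from the repository, and the names `sphere_sdf` and `sphere_trace` as well as the test scene are made up for illustration.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere (negative inside). Hypothetical scene."""
    return math.dist(p, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """March along a normalized ray; return the hit distance, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d = sdf(p)
        if d < eps:
            return t  # close enough to the surface to count as a hit
        t += d  # safe step: nothing in the scene is closer than d
        if t > max_dist:
            break
    return None


hit = sphere_trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = sphere_trace((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
print(hit, miss)
```

The step size is whatever the distance function returns, which is why the ray can take large strides through empty space and only slows down near surfaces.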

buto

« Reply #1 on: April 12, 2019, 12:25:04 PM »

Very nice pictures and interesting approach! Great inspiration for thinking about some shader based ray tracing...

Thanks for documenting your approach so well.

Regards

szczm_

Guest

« Reply #2 on: April 12, 2019, 11:41:49 PM »

Thank you! I tried my best. Some of the methods should not be replicated verbatim, I will admit that, but with some optimization/modification they could very well be used in other projects. Even the brute force sampling of the heightmap texture is surprisingly efficient for a brute force approach.
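
To make "brute force sampling" concrete, here is a rough sketch of a fixed-step march against a height function. It assumes a hypothetical `height(x, z)` standing in for the heightmap texture lookup, and the step size is arbitrary; the actual shader in the repository may well work differently.

```python
import math


def height(x, z):
    """Hypothetical stand-in for a heightmap texture lookup."""
    return 0.5 * math.sin(x) * math.cos(z)


def march_heightmap(origin, direction, step=0.01, max_dist=50.0):
    """Fixed-step march: return the first t at which the ray is at or below the terrain."""
    t = 0.0
    while t < max_dist:
        x, y, z = (o + t * d for o, d in zip(origin, direction))
        if y <= height(x, z):
            return t
        t += step  # brute force: same small step everywhere
    return None


# Camera two units above the origin, looking straight down, then straight up.
t_down = march_heightmap((0.0, 2.0, 0.0), (0.0, -1.0, 0.0))
t_up = march_heightmap((0.0, 2.0, 0.0), (0.0, 1.0, 0.0))
print(t_down, t_up)
```

The cost grows with `max_dist / step` samples per ray, which is what makes it brute force; its appeal is that it needs no distance information at all, only the height at a given position.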

fluffrabbit

Guest

« Reply #3 on: April 13, 2019, 06:22:55 AM »

This looks amazing! Reminds me of Marble Marcher. Since you mentioned in the documentation that performance can slow down with large flat surfaces, I am wondering what your futurological prediction is for the near future of VR and realistic rendering. Will we be using raytracing like this, or will PBR be better for the general case? Perhaps some sort of hybrid?

szczm_

Guest

« Reply #4 on: April 13, 2019, 07:57:39 AM »

> This looks amazing! Reminds me of Marble Marcher. Since you mentioned in the documentation that performance can slow down with large flat surfaces, I am wondering what your futurological prediction is for the near future of VR and realistic rendering. Will we be using raytracing like this, or will PBR be better for the general case? Perhaps some sort of hybrid?

Thanks! I just love it when I post something on the internet and people from around the world mention similar projects that either did exactly what I did, but better, or are an inspiration for me.

As for the second part of your reply: this particular (my) implementation of sphere tracing is very simple. Not only can things like the flat plane case be taken into account and compensated for, there are many other optimizations available to implement. And it's not just sphere tracing that has already been proven usable in realtime; so has (almost realtime) path tracing and, in some use cases, ray tracing.

PBR is of course the method for now, all the more so because it is basically an improved model of what we have already been doing for many years. Any kind of tracing requires (in my opinion, with my current understanding) at least a medium makeover of the rendering, material etc. pipeline, and the hardware is not yet fast enough. Thus, PBR for now.

Also, polygonal meshes. They are the kryptonite of the tracing world for now.

EDIT: Oh, and yeah, hybrid solutions are already available and widely used, e.g. Unreal's soft shadows.
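
The flat plane slowdown discussed here can be reproduced with a small experiment. The sketch below counts distance evaluations for a ray hitting a ground plane head-on versus one skimming it at a shallow angle; the scene, the 0.5 degree angle and the helper names are assumptions chosen for illustration.

```python
import math


def plane_sdf(p):
    """Distance to the ground plane y = 0."""
    return p[1]


def count_steps(origin, direction, sdf, eps=1e-4, max_steps=10_000):
    """Return how many SDF evaluations a sphere tracer needs to reach the surface."""
    t = 0.0
    for step in range(1, max_steps + 1):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d = sdf(p)
        if d < eps:
            return step
        t += d
    return max_steps


origin = (0.0, 0.1, 0.0)
steep = count_steps(origin, (0.0, -1.0, 0.0), plane_sdf)

angle = math.radians(0.5)  # ray almost parallel to the plane
grazing = count_steps(origin, (math.cos(angle), -math.sin(angle), 0.0), plane_sdf)
print(steep, grazing)
```

For the grazing ray the height above the plane shrinks by only a small fraction per step, so the step size decays geometrically and the ray needs hundreds of evaluations to travel a short distance. Typical mitigations include capping the iteration count or scaling the hit threshold with distance.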

fluffrabbit

Guest

« Reply #5 on: April 13, 2019, 10:33:34 AM »

Marble Marcher is technically better, but you look like a badass for doing this in LÖVE. Also, your explanations are very simple and straightforward for others to learn from.

I am very new to this myself. I'm trying to dig into backend rendering, preparing to implement PBR and limited bone animations on top of my current unshaded GL renderer, with heavy inspiration from other people's code. It's a long journey for those who aren't mathematically inclined, so I look at sphere tracing, which is lightyears ahead, and I don't know what to think.

The little lines and balls have to inch their way through the geometry, and that's relatively slow, but at the same time there's the NVidia RTX and all that marketing hucksterism, and Moore's Law and Ray Kurzweil's futurisms and all that. So I'm thinking, arbitrary polygonal meshes and fine-grained voxel meshes are the way to go, and ray/sphere/path-marching/tracing makes it that much better. I don't know if these tracing/marching techniques improve graphical quality overall these days, but at lower resolutions (like 960x540) it may be plausible.

Shadows are just a small part of graphics. Show me a realtime rendered double-slit experiment (shining a laser through a couple of really small slits to get a barcode pattern) and then I'll be convinced that raytraced shadows offer a clear advantage over buffered shadows absent other tracing/marching techniques.

szczm_

Guest

« Reply #6 on: April 13, 2019, 11:26:50 AM »

> The little lines and balls have to inch their way through the geometry, and that's relatively slow, but at the same time there's the NVidia RTX and all that marketing hucksterism, and Moore's Law and Ray Kurzweil's futurisms and all that. So I'm thinking, arbitrary polygonal meshes and fine-grained voxel meshes are the way to go, and ray/sphere/path-marching/tracing makes it that much better. I don't know if these tracing/marching techniques improve graphical quality overall these days, but at lower resolutions (like 960x540) it may be plausible.
>
> Shadows are just a small part of graphics. Show me a realtime rendered double-slit experiment (shining a laser through a couple of really small slits to get a barcode pattern) and then I'll be convinced that raytraced shadows offer a clear advantage over buffered shadows absent other tracing/marching techniques.

I meaaaan, with sphere tracing you can also do things like real reflections (no faking: realtime reflections, self reflections, multiple reflections, exact reflections that take into account things like, I don't know, position?), very accurate world space ambient occlusion, and the shadows themselves are rather accurate soft shadows:

(Screenshot from some other project I did a long time ago)

And all of these are very easy to implement and a big step up from traditional methods. The main problem is performance, which (when considering a general use case) is quite close to some path tracing methods used in the industry right now, but still not fast enough when compared to traditional rendering. I guess we just have to wait? But considering how the Unity HDRP DXR preview just came out and seeing what people are doing with it, we won't have to wait long.
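
As an illustration of why soft shadows are cheap in a distance-field renderer, here is a sketch of one widely used penumbra approximation: march from the shaded point toward the light and keep the smallest ratio of distance-to-surface over distance-travelled. This is an assumption about the kind of technique meant, not the code behind the screenshot; `scene_sdf`, `soft_shadow`, the occluder and the sharpness factor `k` are all illustrative.

```python
import math


def scene_sdf(p):
    """Hypothetical occluder: unit sphere floating at (0, 2, 0)."""
    return math.dist(p, (0.0, 2.0, 0.0)) - 1.0


def soft_shadow(point, light_dir, k=8.0, t_min=0.02, t_max=20.0, max_steps=64):
    """Return a light factor in [0, 1]: 0 is fully shadowed, 1 is fully lit."""
    res = 1.0
    t = t_min
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(point, light_dir))
        h = scene_sdf(p)
        if h < 1e-3:
            return 0.0  # the shadow ray hit the occluder
        res = min(res, k * h / t)  # a near miss darkens the result
        t += h
        if t > t_max:
            break
    return res


up = (0.0, 1.0, 0.0)  # light directly overhead
shadowed = soft_shadow((0.0, 0.0, 0.0), up)  # directly under the sphere
penumbra = soft_shadow((1.2, 0.0, 0.0), up)  # just outside its silhouette
lit = soft_shadow((5.0, 0.0, 0.0), up)       # far away from it
print(shadowed, penumbra, lit)
```

The same march that answers "is the light blocked?" also reports how closely the ray passed the occluder, so the penumbra needs no extra rays. Larger `k` gives harder shadow edges.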

fluffrabbit

Guest

« Reply #7 on: April 14, 2019, 07:13:26 AM »

> But considering how the Unity HDRP DXR preview just came out and seeing what people are doing with it, we won't have to wait long

What Unity is doing appears to be a hybrid approach.

> To achieve a real-time frame rate while upholding quality, our Unity Labs researchers developed an algorithm
>
> <snip>
>
> With this approach, the visibility (area shadow) can be separated from the direct lighting evaluation, while the visual result remains intact. Coupled with a denoising technique applied separately on these two components, we were able to launch very few rays (just four in the real-time demo) for large textured area lights and achieve our 30 fps target.

(emphasis added)

Perhaps I'm misunderstanding something, but four rays is not raytraced rendering. This is PBR with extra sauce. Sphere marching is the real deal. Perhaps a hybrid solution could employ lower-resolution sphere marching elements (to fill in spaces between edges or something). This confirms my suspicions that the RTX was not designed with a fully raytraced realtime pipeline in mind.

Now I'm wondering if there is a way to realtime render arbitrary polygonal geometry using sphere marching.

For cases involving opaque reflection (not refraction), if you want to find f(x, y, z) where f relates to whether or not a point is inside a triangle, define the triangle with vectors xyz1, xyz2, xyz3 and say that the point is inside if it is within the triangle's screen-space bounds and farther than the triangle's depth at that point. This could also be extended to a whole mesh, where the mesh is rendered to the depth buffer and points are tested against that.

But hey, I'm an idiot and I probably misunderstand the whole concept.

« Last Edit: April 14, 2019, 07:41:42 AM by fluffrabbit »

szczm_

Guest

« Reply #8 on: April 14, 2019, 03:20:36 PM »

> Now I'm wondering if there is a way to realtime render arbitrary polygonal geometry using sphere marching.
>
> For cases involving opaque reflection (not refraction), if you want to find f(x, y, z) where f relates to whether or not a point is inside a triangle, define the triangle with vectors xyz1, xyz2, xyz3 and say that the point is inside if it is within the triangle's screen-space bounds and farther than the triangle's depth at that point. This could also be extended to a whole mesh, where the mesh is rendered to the depth buffer and points are tested against that.
>
> But hey, I'm an idiot and I probably misunderstand the whole concept.

Well, you're not wrong, although the process that you're describing is closer to just normal rasterization, or ray tracing.

Main problem is, if we're strictly talking sphere tracing, it works on signed distance fields/functions, and these return a distance to the closest point in world (incl. the mesh that we want to render). A traditional polygonal mesh has no such information included. AFAIK, to get one into a sphere tracer, the current approach is to process it into a 3D texture containing distance data, so once you're inside the bounding box in world space, you can sample the texture to get correct distance info.

The problem is, this is just a different realm. You could easily render a 10k-vert textured animated zombie right now in Unity or something similar in maybe 10 minutes. All the tools are ready, the hardware is ready, the GPU is made for rendering triangles. And what if you'd want to use sphere tracing? My off-the-top-of-my-head approach would be an animated (high frequency), high resolution 3D texture. Not only will you probably lose precision, you also will need lots of memory, and since you won't be interpolating bone positions, rotations, doing IK and all that stuff, it would be more like a sprite animation, unless you want to morph the mesh like a tesseract, then no problem.

Of course it's a lot easier for static meshes, and that's already been done many times. But that's again just distance data, out of which you trace towards a point (position) in space. Getting a normal vector is stupid easy (you make a gradient using the SDF, AKA "towards which direction does the SDF change the fastest"), but how do you store UV data? Other information? Density (which is actually a cool, doable thing, but requires more memory again), material properties, etc.?

And if you'd ever think "meh, I'll just convert the mesh into triangles and put the triangles as an SDF into the shader", not only do you get the problem of how you'd put it in (and the solution is not a big array of triangles, nor is it a very big equation that includes each triangle), but our current algebra (or sphere tracing, or shaders) is not really good at calculating a distance to even a single triangle, never mind the sign.

But ray tracing is good at zapping triangles with rays, although a tad slow, and we've been using it for ages now.

Also, I'm pretty sure that one day we won't be using triangles, but voxels or point clouds, or some other atomic system. Why lose precision in the first place?
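
The "gradient of the SDF" trick for normals mentioned above can be sketched with central differences. The example assumes a unit sphere at the origin as the distance function; `estimate_normal` and the offset `h` are illustrative names and values, not something from the repository.

```python
import math


def sphere_sdf(p):
    """Signed distance to a unit sphere centered at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0


def estimate_normal(sdf, p, h=1e-4):
    """Approximate the surface normal as the normalized gradient of the SDF at p."""
    grad = []
    for axis in range(3):
        ahead = list(p)
        behind = list(p)
        ahead[axis] += h
        behind[axis] -= h
        # Central difference along one axis; the common 1/(2h) factor
        # cancels out when the vector is normalized below.
        grad.append(sdf(ahead) - sdf(behind))
    length = math.sqrt(sum(g * g for g in grad))
    return tuple(g / length for g in grad)


normal = estimate_normal(sphere_sdf, (0.0, 1.0, 0.0))
print(normal)
```

This costs six extra distance evaluations per shaded point and works for any distance function, including one sampled from a 3D texture, which is why normals are the easy part while UVs and material data are not.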

fluffrabbit

Guest

« Reply #9 on: April 14, 2019, 04:08:02 PM »

> Main problem is, if we're strictly talking sphere tracing, it works on signed distance fields/functions, and these return a distance to the closest point in world (incl. the mesh that we want to render). A traditional polygonal mesh has no such information included. AFAIK, to get one into a sphere tracer, the current approach is to process it into a 3D texture containing distance data, so once you're inside the bounding box in world space, you can sample the texture to get correct distance info.
>
> The problem is, this is just a different realm. You could easily render a 10k-vert textured animated zombie right now in Unity or something similar in maybe 10 minutes. All the tools are ready, the hardware is ready, the GPU is made for rendering triangles. And what if you'd want to use sphere tracing? My off-the-top-of-my-head approach would be an animated (high frequency), high resolution 3D texture. Not only will you probably lose precision, you also will need lots of memory, and since you won't be interpolating bone positions, rotations, doing IK and all that stuff, it would be more like a sprite animation, unless you want to morph the mesh like a tesseract, then no problem.
>
> Of course it's a lot easier for static meshes, and that's already been done many times. But that's again just distance data, out of which you trace towards a point (position) in space. Getting a normal vector is stupid easy (you make a gradient using the SDF, AKA "towards which direction does the SDF change the fastest"), but how do you store UV data? Other information? Density (which is actually a cool, doable thing, but requires more memory again), material properties, etc.?

There was a project a while back called Unlimited Detail, which was just an efficient SVO renderer that the company claimed would "revolutionize gaming". It could do something like 640x480 at 60 fps on a laptop. Conventional raytracing with a single reflection would take, I dunno, twice the render time, but GPUs are twice as fast these days so it's feasible.

So let's say static meshes are turned into 3D textures and rendered with sphere marching, which offers a performance gain. For animated meshes, just render out a 3D texture for each frame, so 60 fps * 4 seconds is 240 3D textures, each one using GPU compression to save space. Maybe they're all generated at load time. Is this not the very imminent future of gaming?

qMopey

« Reply #10 on: April 14, 2019, 09:59:16 PM »

No, it is not the imminent future because 3D textures take too much memory. Going from N^2 to N^3 is a massive jump.

Also, this isn't the "unlimited detail" project you're talking about... Is it? http://www.codersnotes.com/notes/euclideon-explained/

fluffrabbit

Guest

« Reply #11 on: April 14, 2019, 11:38:25 PM »

> No, it is not the imminent future because 3D textures take too much memory. Going from N^2 to N^3 is a massive jump.

Here are some figures for that. Using their example of a 256^3 texture taking up 300 KB, my 240 frame example would take up about 70 MB at a low resolution. Using their greatest compression ratio of 0.59 bits per voxel, a 1024^3 texture would take up about 76 MB * 240 frames = about 18 GB. Completely feasible. For reference, my Intel Atom GPU from 2015 has 256 MB VRAM, so extrapolate that to a modern card that's actually half-way decent.

As for Unlimited Detail: that's the one.
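
The memory figures in this post are easy to recompute. The sketch below uses only the numbers quoted in the post (300 KB for a 256^3 texture, 0.59 bits per voxel, 240 frames); the function and variable names are made up for illustration.

```python
def compressed_bytes(resolution, bits_per_voxel):
    """Size in bytes of a cubic volume at a given average bit rate per voxel."""
    return resolution ** 3 * bits_per_voxel / 8


frames = 60 * 4  # 60 fps for 4 seconds of animation

# Low resolution case: 256^3 at the quoted 300 KB per texture.
low_res_total_mib = 300 * frames / 1024

# High resolution case: 1024^3 at the quoted 0.59 bits per voxel.
per_frame_mib = compressed_bytes(1024, 0.59) / 2 ** 20
high_res_total_gib = per_frame_mib * frames / 1024

print(round(low_res_total_mib, 1), round(per_frame_mib, 1), round(high_res_total_gib, 1))
```

The low resolution animation lands at roughly 70 MB and a single 1024^3 frame at roughly 76 MB, so 240 such frames come to a bit under 18 GB for one animated mesh.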

« Last Edit: April 14, 2019, 11:55:11 PM by fluffrabbit »

szczm_

Guest

« Reply #12 on: April 14, 2019, 11:53:53 PM »

(I wrote this response before @fluffrabbit posted his, but it's still somewhat valid)

As a quick thought experiment, let's say we have a single 256³ (I guess we could interpolate), 16-bit (half precision, why not) 3D texture. With no compression, that's 32 MB for a single frame of a single mesh. Let's assume what you (@fluffrabbit) said, 60 * 4, and generating this in realtime. That's 32 MB (of course, no compression) every 1/60th of a second, so 1920 MB every second, generated and passed to the GPU on the fly. And that's with no instancing, for a single mesh. Even if we did 30 Hz (which would… well, look bad), you'd still have nearly a GB of data flying around every second.

And as for the article @qMopey linked to, it mentions Dreams, which if I remember correctly uses sphere tracing, and they do have animations, but they do lots of things to make it work, and it's only on PS4. I don't remember if they ever explained animations, but you can't import skeletal animations into the engine, and I'm guessing in-game it's more like a skeleton made up of static meshes (which are in fact 3D textures or voxel based).

There's an amazing talk by Alex Evans, who explained how they approach graphics in their game (there's probably a fair bit of it explained, but I haven't watched it in a while).
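
The thought experiment above works out as follows. This is just the arithmetic from the post (256³ voxels, 2 bytes each, uploaded every frame) with illustrative names.

```python
def volume_bytes(resolution, bytes_per_voxel):
    """Uncompressed size in bytes of a cubic 3D texture."""
    return resolution ** 3 * bytes_per_voxel


frame_mib = volume_bytes(256, 2) / 2 ** 20  # 16-bit (half precision) voxels

rate_at_60hz = frame_mib * 60  # MiB per second when regenerating every frame
rate_at_30hz = frame_mib * 30

print(frame_mib, rate_at_60hz, rate_at_30hz)
```

That is 32 MiB per frame, 1920 MiB per second at 60 Hz and 960 MiB per second at 30 Hz, for a single uninstanced mesh.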

fluffrabbit

Guest

« Reply #13 on: April 15, 2019, 12:36:53 AM »

Interesting talk. Parallax occlusion mapping was mentioned, which doesn't give you raytraced/sphere-marched reflections. They went with point cloud billboard splatting at the time he gave the talk, which looks artsy but also doesn't have all the same light features.

Developer / Technical (Moderator: ThemsAllTook) / Raymarching procedural landscape generator (PRETTY PICTURES INSIDE)