
DDRKirby(ISQ)

« on: May 24, 2021, 01:29:28 PM »

Welcome to Rhythm Quest!

About the game

Rhythm Quest is an upcoming 2-button rhythm platformer game being developed by DDRKirby(ISQ).

Jump, attack, and fly to the beat to get past challenging obstacles in this rhythm-based twist on a traditional "runner" game.

Each type of enemy requires different button sequences to defeat:

Wings offer extra mid-air jumps, while flight paths require you to hold down the jump button:

Speed zones change up the rhythm of the song:

- Over 30 levels across 6 different worlds, each with their own musical themes and gameplay mechanics

- "9-bit" chiptune soundtrack hand-crafted to match gameplay rhythms

- Adjustable latency calibration and audio scheduling for tight and responsive gameplay

- Use coins to unlock bonus stages, alternate character skins, and more extras

More details

Rhythm Quest is a labor of love from me, DDRKirby(ISQ). I'm handling 100% of the game, including the art, audio, coding, game design, and management.

In particular, I'll be creating all of the backing music tracks for each level, using my trademark "9-bit" modern chiptune style. Being both the game designer and composer for a rhythm game allows me to create music tracks that are mapped out specifically to provide call-outs to obstacles in the game. This worked especially well for previous games of mine.

The game is being built using Unity3D, and will be available on Windows/OSX/Linux, as well as Switch, iOS, and Android (the 2-button control scheme lends itself quite well to mobile devices).

The story so far

I first started Rhythm Quest in late 2016 as a side project during a break between jobs, where I built out the core functionality that you see in the demo above. The game had promise and I was pretty happy with what I had built, but it ended up taking a backseat to employment and other projects...until now!

I'm happy to report that I've quit my full-time job and have dedicated myself to attempting to finish and publish Rhythm Quest.

About the developer

DDRKirby(ISQ) -- that's me -- is a 9-bit chiptune music artist and independent game developer. If you know of me, it's most likely through some of my more popular video game song rearrangements, such as my Katamari Damacy - Lonely Rolling Star remix or my Super Mario 64 - Koopa's Road remix. You can browse my full music catalog on my Bandcamp site at https://ddrkirbyisq.bandcamp.com/.

I'm also a veteran participant in the weekend-long "Ludum Dare" game jam, having made 25 jam games over the past 10 years. Some of my more notable entries include the music games Ripple Runner, Melody Muncher, and Samurai Shaver, which took 1st place overall in Ludum Dare 40.

Links

- Rhythm Quest on Steam: https://store.steampowered.com/app/1635640/Rhythm_Quest/
- Rhythm Quest main website: https://rhythmquestgame.com
- My main website: https://ddrkirby.com
- Rhythm Quest discord server: https://discord.com/invite/w3paJPchmb
- Twitter: https://twitter.com/RhythmQuestGame

Also feel free to follow my general accounts:

- Twitter: https://twitter.com/ddrkirbyisq
- YouTube: https://www.youtube.com/ddrkirbyisq
- itch.io: https://ddrkirbyisq.itch.io/
- Patreon: https://www.patreon.com/ddrkirbyisq
- Facebook: https://www.facebook.com/DDRKirbyISQMusic


« Last Edit: February 09, 2023, 04:30:27 PM by DDRKirby(ISQ) »


Silkworm

« Reply #1 on: May 25, 2021, 07:28:46 AM »

Oh hey! I love your music, I'd play this just for that honestly even though I'm not much of a rhythm game player. With only two buttons it seems casual enough and perfect for mobile. Looks (and sounds of course) pretty nice, satisfying and juicy already. Good luck with this!


DDRKirby(ISQ)

« Reply #2 on: May 25, 2021, 02:36:56 PM »

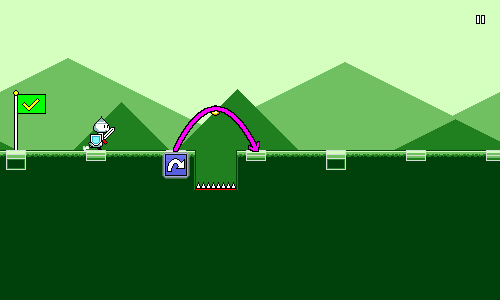

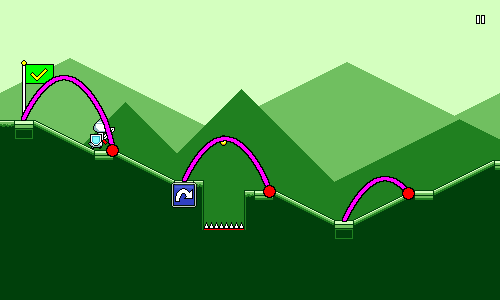

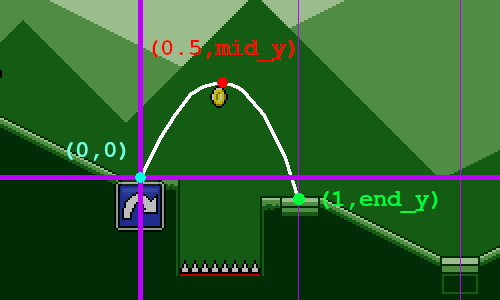

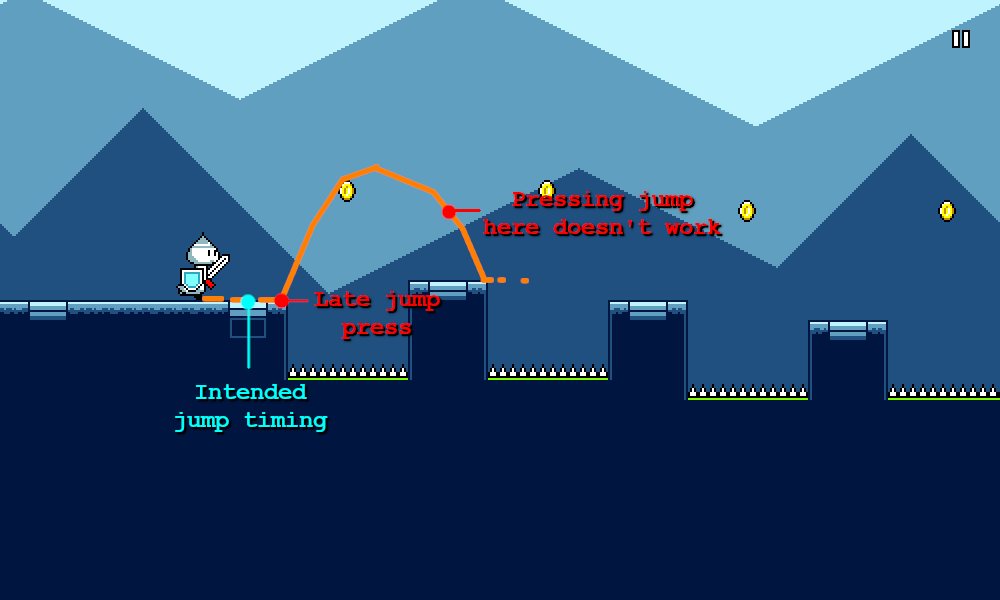

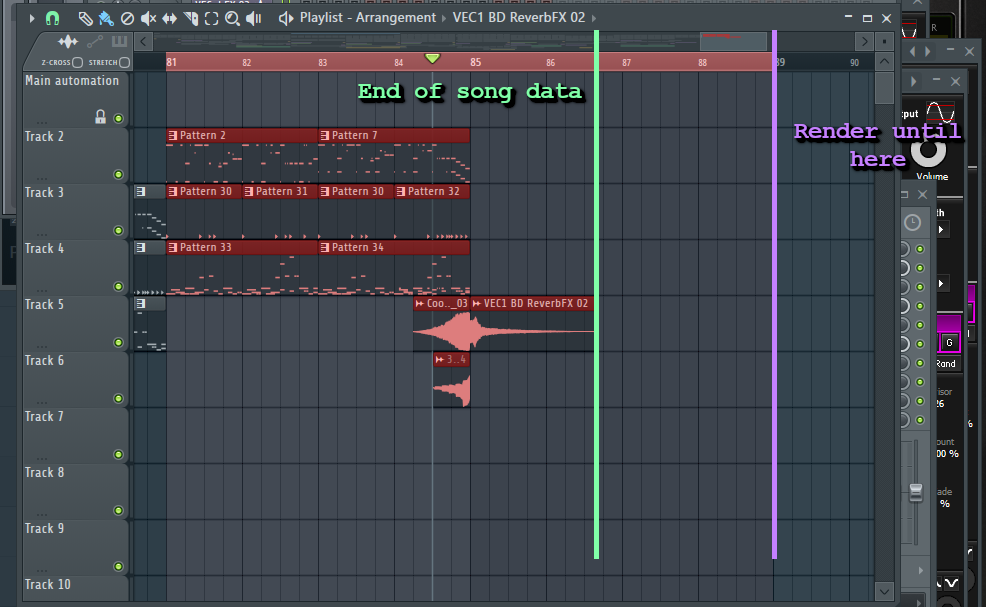

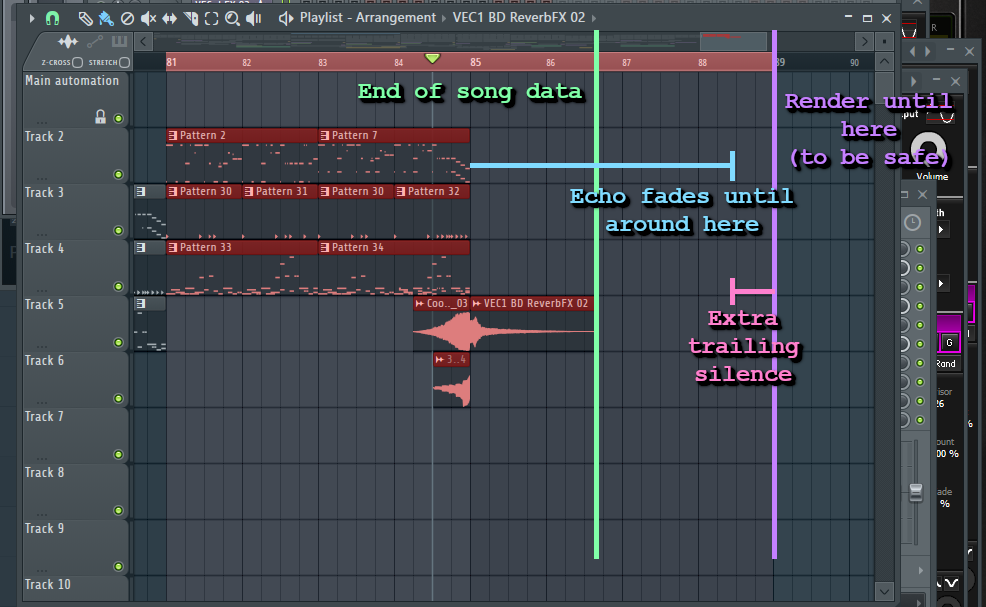

Jump Arcs

I spent some time yesterday refactoring and refining the jump mechanics for Rhythm Quest and thought I'd write a bit about it, since it's more interesting than it might seem at first blush.

In Rhythm Quest, each (normal) jump is roughly one beat in length. The exact horizontal distance varies, though, since the scroll rate is different for each song (and even within a song).

The naive approach

Your first instinct when implementing something like this might be to use simple platformer-style tracking of vertical velocity and acceleration:

void Jump() {

yVelocity = kJumpVelocity;

}

void FrameUpdate(float deltaTime) {

yVelocity += kGravityConstant * deltaTime;

yPosition += yVelocity * deltaTime;

}

Here we're essentially using "semi-implicit Euler integration", where we update the velocity and position incrementally at each timestep. Surprisingly, it's actually fairly important that we modify the velocity before the position and not the other way around! See https://gafferongames.com/post/integration_basics/ for more on this.

There are a number of issues with this approach. Probably the biggest one is that the result depends on the size of each timestep (deltaTime), which means that the jump will behave differently depending on the FPS of the game! You can fix that by using a fixed timestep to do this physics calculation instead of a variable timestep (Unity's FixedUpdate and Godot's _physics_process both exist for this purpose). The other problem is one that's specific to Rhythm Quest...

The problem

So after some algebra (or trial and error), we have our jump velocity and gravity figured out. The jump paths look something like this:

That's all fine and good, but when we add height changes into the mix, it's a different story:

The sloping ramps in Rhythm Quest aren't actually supposed to have any bearing on gameplay -- they're mostly there for visual interest and to accentuate the phrasing of the music (it looks a lot more interesting than just running across an entirely flat plain). But they're actually causing gameplay issues now, since they throw off the duration of each jump. It might not seem like much, but it can add up and cause mistakes, especially in sections like this:

The above gif looks perfectly aligned though, because it's using a better, more robust solution. How did I manage to dynamically alter the jumping behavior to match the changing platform heights?

A more robust solution

The first thing we need to do is throw out our ideas of having a predetermined/fixed jump arc, since that obviously didn't work. Instead, we're going to calculate each jump arc dynamically. No trial-and-error here, we're going to use quadratics!
The fundamental equation for a parabolic / ballistic arc trajectory is given by y = ax^2 + bx + c. If we assume a start position of x = 0 and y = 0 (this would be the start of the jump), then we can simplify that to y = ax^2 + bx. In other words, if we know the two constants a and b, then we can just plug them into this equation and have a mapping between y and x which will trace out an arc. a here represents our "gravity" term and b represents our initial velocity.

Since we have two unknowns (a and b), we can solve for them if we're given two nonzero points. In other words, we just need to pick two points along the path of the jump arc, and then we can solve the algebra and have a formula that we can use to calculate our y-coordinates.

The whole idea of this exercise is to have the player land exactly on the target position at the end of the jump, so let's pencil that in as one of our points (shown here in green). To make our lives easier, we'll say that the x-coordinate at this point is 1:

Of course, in order for this to work, we need to know exactly what end_y is. We could try to calculate this using a raycast, but that wouldn't work if your jump "landing" position isn't actually on the ground (e.g. you mis-timed a jump and are going to fall into a pit!).

Instead the way that this works is that I have a series of "ground points" that are generated on level initialization. These form a simple graph of the "ground height" of the level, minus any obstacles. This lets me easily query for the "ground height" at any x-coordinate by using linear interpolation. Conceptually it looks like this:

For our third point (counting the origin as the first), let's just have that be in the middle of the jump horizontally.

There are a couple of different options we could use for determining mid_y, the height of this point. Here's what I ended up with after some twiddling around:

// Some constant base jump height.

float midHeight = GameController.JumpHeight;

if (yDiff > 0.0f) {

// The end of the jump is higher than the beginning.

// Bias towards jumping higher since that looks more natural.

midHeight += yDiff * 0.5f;

} else {

// The end of the jump is lower than the beginning.

// Here I bias towards jumping lower, but not as much as above.

// It ends up looking a bit more natural this way.

midHeight += yDiff * 0.25f;

}

We have all of our variables and knowns, so let's actually do the math now! We have two equations that we get from plugging in our two points into the basic formula y = ax^2 + bx:

mid_y = 0.25a + 0.5b // x = 0.5, y = mid_y

end_y = a + b // x = 1, y = end_y

This is extremely easy to solve -- just multiply the top equation by two and take the difference. In the end we get:

a = 2 * end_y - 4 * mid_y

b = end_y - a

Now that we know a and b, we can store them and then use them to trace out the path of the arc! So to wrap it up, each time the player presses jump, we:

- Store the beginning x-coordinate and y-coordinate

- Calculate a and b as shown above

- On subsequent frames, set y = ax^2 + bx + starting_y, where x is the horizontal progress through the jump (0 at the start, 1 at the landing point)
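The steps above can be sketched as a small standalone C# type. This is only an illustration under stated assumptions: `JumpArc`, `Solve`, `HeightAt`, and `MidHeight` are hypothetical names, not the game's actual code, and the progress value `t` is assumed to be normalized so that 0 is takeoff and 1 is landing.

```csharp
using System;

// Minimal sketch of the per-jump arc solver described above.
// JumpArc and its members are hypothetical names for illustration.
public struct JumpArc
{
    public readonly float A;      // quadratic ("gravity") coefficient
    public readonly float B;      // linear ("initial velocity") coefficient
    public readonly float StartY; // y-coordinate where the jump began

    public JumpArc(float a, float b, float startY)
    {
        A = a;
        B = b;
        StartY = startY;
    }

    // Picks the height of the midpoint relative to the start of the jump,
    // using the same 0.5 / 0.25 biasing as the snippet in the post.
    public static float MidHeight(float baseJumpHeight, float yDiff)
    {
        return baseJumpHeight + yDiff * (yDiff > 0f ? 0.5f : 0.25f);
    }

    // midY and endY are heights relative to startY, at t = 0.5 and t = 1.
    public static JumpArc Solve(float startY, float midY, float endY)
    {
        float a = 2f * endY - 4f * midY;
        float b = endY - a;
        return new JumpArc(a, b, startY);
    }

    // t is normalized jump progress: 0 at takeoff, 1 at landing.
    public float HeightAt(float t)
    {
        return A * t * t + B * t + StartY;
    }
}
```

With this shape, converting a world x-coordinate to `t` would be something like `(x - startX) / jumpLengthX`, where both of those values are assumed to be stored when the jump begins.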

The end result, once more:

This is way better than our naive solution from the beginning of the article. Not only does it work with varying heights, but we derived an exact equation to trace out our jump arc (no approximations!), which means we can just update the visual x and y coordinates in the rendering update instead of having to deal with physics-based timesteps.

A few extra things I also ended up doing to make the jumps feel better:

First, I made jumps actually last slightly shorter than one beat. This is because it looks more natural to have a short period where the character has definitively landed on the ground before the next jump. This also allows for some more leeway for timing consecutive jumps, and ensures that timing errors don't compound unfairly.

I also allow for early jumps -- in other words, you can re-jump slightly before you actually touch the ground. This again helps with ensuring that timing errors don't compound, and is a nice quality-of-life improvement for players. In this case I make sure to "snap" your y-coordinate downwards at the beginning of the jump, so it still looks as if you ended up touching the ground (even though you didn't really).
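Coming back to the "ground points" lookup that supplies end_y: a lookup like that could be sketched as below. This is a guess at the general shape rather than the real implementation; `GroundProfile` is a hypothetical name, and the points are assumed to be sorted by strictly increasing x.

```csharp
using System;

// Sketch of a ground-height lookup using linear interpolation.
// GroundProfile is a hypothetical name; points must be sorted by x.
public sealed class GroundProfile
{
    private readonly float[] _xs; // strictly increasing x-coordinates
    private readonly float[] _ys; // ground height at each x

    public GroundProfile(float[] xs, float[] ys)
    {
        if (xs.Length != ys.Length || xs.Length == 0)
            throw new ArgumentException("Need matching, non-empty point arrays.");
        _xs = xs;
        _ys = ys;
    }

    public float HeightAt(float x)
    {
        // Clamp to the first/last point outside the covered range.
        if (x <= _xs[0]) return _ys[0];
        int last = _xs.Length - 1;
        if (x >= _xs[last]) return _ys[last];

        int i = Array.BinarySearch(_xs, x);
        if (i >= 0) return _ys[i]; // x is exactly on a ground point

        int hi = ~i;     // index of the first point to the right of x
        int lo = hi - 1; // index of the point to the left of x
        float t = (x - _xs[lo]) / (_xs[hi] - _xs[lo]);
        return _ys[lo] + t * (_ys[hi] - _ys[lo]);
    }
}
```

Because the lookup ignores obstacles and pits, it still returns a sensible landing height when the jump is mis-timed, which is the property the raycast approach lacks.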


DDRKirby(ISQ)

« Reply #3 on: June 07, 2021, 01:51:55 PM »

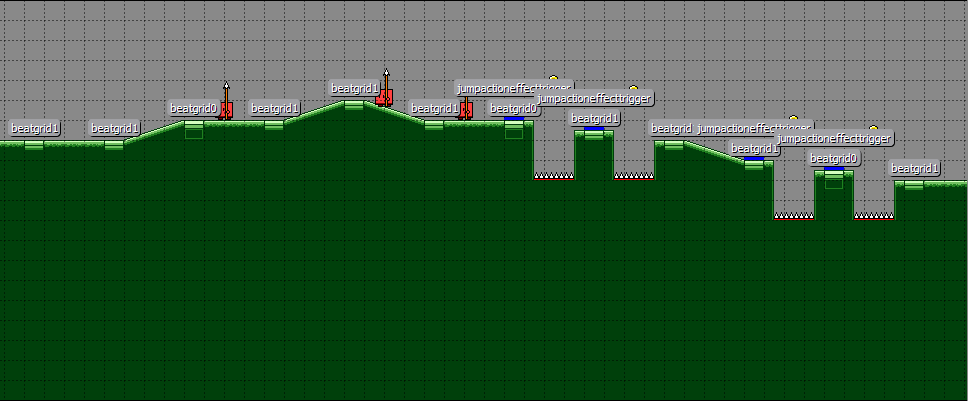

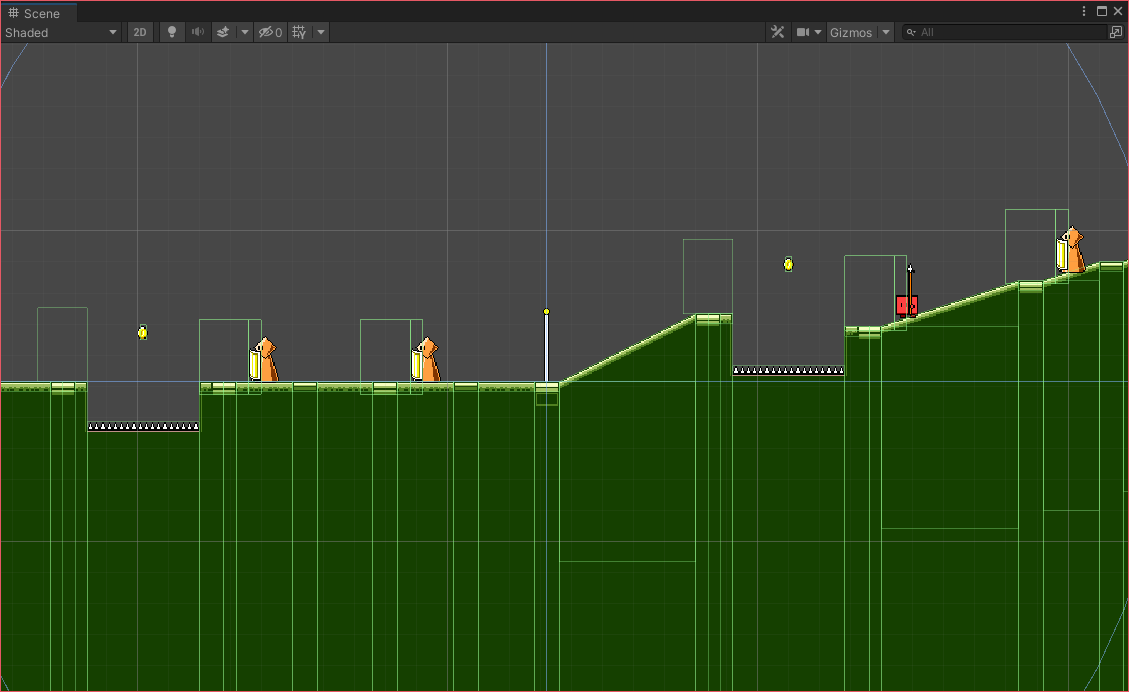

Flying Mechanic and Level Generation

After a bunch of work, I finally have a new core mechanic working in Rhythm Quest! This video showcases the flight mechanic, where you need to press and hold the jump button in order to traverse a flight path using a wing pickup.

I should take this chance to explain briefly how level design works in Rhythm Quest. Wayyy back in the infancy of Rhythm Quest development, levels were built using the Tiled map editor (https://www.mapeditor.org/). It looked something like this:

While this provided a nice visual representation of what the level looks like, it was severely limiting. The scrolling speed of the gameplay couldn't be adjusted at all, as it was locked to the tile width. In addition, it took ages to lay out all the tiles for the level, and iterating on the design was painful -- changing the ramp/height structure for one section affected the entire rest of the map, which was a mess to deal with in the editor. Every single beat marker also needed to be placed by hand. It became clear pretty quickly that this wasn't going to be workable in the long run.

Here's what a level looks like now:

"level1-1",

new LevelData() {

PrettyName = "Level 1-1\nSunrise Sonata",

BeatsPerChar = 1.0f,

Obstacles =

"#... .... ..1. ..1. ..1. ..11" +

"#.^. ..^. ..^. ..^^" +

"#.<< ..11 ..-- ..11" +

"#.^1 ..^1 ..++ 1111" +

"#+.1 .+.1 .+.+ .111" +

"#<.1 .<.1 .111 1..." +

"#.+. ..-. ..-. ..--" +

"#.1. ..1. ..11 1...",

Height = "" +

".... .... .... .... .... ...." +

".... .... .... ...." +

".... .... .... ...." +

".c.. .c.. .C.. CCBB" +

"...c ...C .... .cC." +

"...c ...c .ccc ...." +

".C.. .c.. .c.. CC.." +

".C.. .c.. ..Cc ....",

Checkpoints = new []{

new CheckpointData { Hue = 0.0f, PixelsPerBeat = 80.0f, RespawnOffset = 8.0f },

new CheckpointData { Hue = 0.0f },

new CheckpointData { Hue = 30.0f },

new CheckpointData { Hue = 30.0f },

new CheckpointData { Hue = 240.0f, PixelsPerBeat = 140.0f },

new CheckpointData { Hue = 270.0f },

new CheckpointData { Hue = 150.0f, PixelsPerBeat = 110.0f },

new CheckpointData { Hue = 120.0f },

},

ExtraEvents = new []{

new LevelGenerator.EventData(10.0f, LevelGenerator.EventType.AttackTutorial),

new LevelGenerator.EventData(26.0f, LevelGenerator.EventType.JumpTutorial),

},

}

That's right, the level is just a bunch of simple string constants! There are two main strings that are relevant here: Obstacles and Height.

Obstacles denotes the actual gameplay elements such as enemies and jumps. Each character denotes something specific, so for example the # symbol represents a checkpoint and the ^ symbol denotes a jump over a pit of spikes. 1 and 2 represent single and double hit enemies, respectively. A period (.) represents a beat with no obstacle (whitespace is ignored and is used for organization only). Each character represents 1 beat by default, but this can be changed on a per-level basis.

Height denotes the slopes/ramps going up and down over the course of the level (this is for the most part purely for visual interest and variation). This has its own separate string since it's often the case that ramps and obstacles happen at the same time.

The LevelGenerator script is responsible for taking these instructions and actually creating the entire level programmatically via instantiating and initializing various objects (prefabs). In the end, the level looks something like this in Unity's scene view:

As you can see, the ground is actually made of a ton of different segments/pieces. I'm making heavy use of Unity's sprite tiling functionality here to create blocks of arbitrary width and height, so I just need to provide a set of about 9 different graphics/prefabs and then the level generator can build everything out of those.

This level generation process is one of the main things that I'll be working on over the next month or two as I strive to add additional core (and side) mechanics to the game. The flying mechanic shown earlier, for example, involved adding a bunch of code for generating the waypoints in the flight path as well as the spike segments below. Some of my future mechanic ideas will also probably involve major refactors to this code, to support things like triplet meters, more complicated obstacle combinations, etc.

While there's some additional up-front cost involved in designing out the level generation system programmatically like this, the ability to iterate and build out levels quickly more than makes up for it in the end.
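To make the string format a bit more concrete, here is a minimal sketch of how an Obstacles string could be turned into a list of timed events. It is not the real LevelGenerator; `ObstacleParser` and `Parse` are hypothetical names, and it assumes that every non-whitespace character (including the # checkpoint marker) occupies one slot, which matches how the Obstacles and Height rows line up in the level above.

```csharp
using System;
using System.Collections.Generic;

// Sketch of reading an Obstacles string into (beat, symbol) pairs.
// ObstacleParser is a hypothetical name, not part of the actual game code.
public static class ObstacleParser
{
    public static List<KeyValuePair<float, char>> Parse(string obstacles, float beatsPerChar)
    {
        var events = new List<KeyValuePair<float, char>>();
        int slot = 0; // counts characters that occupy a beat slot

        foreach (char c in obstacles)
        {
            // Whitespace is only there to keep the strings readable.
            if (char.IsWhiteSpace(c)) continue;

            // A period is an empty beat; anything else is an obstacle or marker.
            if (c != '.')
            {
                events.Add(new KeyValuePair<float, char>(slot * beatsPerChar, c));
            }
            slot++;
        }
        return events;
    }
}
```

A real generator would then switch on each symbol to instantiate the matching prefab at the x-coordinate for that beat; that part depends on engine details not shown in the post.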


DDRKirby(ISQ)

« Reply #4 on: June 11, 2021, 02:51:57 PM »

Music/Game synchronization

Today I'm going to be taking an initial look at Rhythm Quest's music synchronization code, both as an informative technical log and also as a way to refresh my memory of the system that I've ended up building. There's a LOT of under-the-hood logic that goes on in trying to keep everything working properly, so there will probably be some more followup posts about music sync. For now, let's try to cover the basics (synchronizing gameplay to a backing music track).

Background

The art of synchronizing music to gameplay is a bit of an arcane one, with numerous compounding factors and difficulties interfering with what seems like a simple concept. As much as you'd like to just "play the music and start the game at the same time", audio buffers, DSP timing, input latency, display lag, timing drift, and other things need to be considered in order to have a robust approach. The exact nature of these problems and their corresponding solutions are also engine-specific most of the time.

Regardless, here is a brief but non-exhaustive list of the things that can go wrong when using a naive latency-unaware approach to music/gameplay synchronization:

- Audio scheduling - Telling an audio clip to play doesn't send audio data to the hardware instantaneously. The audio needs to be loaded, scheduled, mixed, etc.

- Audio buffering - Audio is mixed using circular buffers and the exact size of these buffers adds some amount of latency to audio output.

- Frame-based timing - Input/audio/timing is often handled on a per-frame basis, even if the actual events happened between frames.

- Input latency - There may be some amount of input latency, either hardware or (more likely) from the game engine, particularly for touch-based inputs.

- Visual/display latency - There's some amount of delay before a visual update can actually be seen on the screen (vsync, monitor lag, etc.)

- Player - Players can "expect" certain amounts of latency due to previous experience with other games

- Etc...

I'd highly recommend studying the notes of Exceed7 Experiments (https://exceed7.com/native-audio/rhythm-game-crash-course/index.html) on this subject if you're unfamiliar with thinking about these sorts of concepts.

A Naive Approach

An initial stab at creating music/gameplay synchronization might be to do something like this:

void Update() {

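// Unity won't let you assign transform.position.x directly, so copy, modify, and write back.
Vector3 position = player.transform.position;
position.x = audioSource.time * SOME_SPEED_CONSTANT;
player.transform.position = position;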

}

This works great for a quick-and-dirty prototype (it's essentially what I did for the initial version of Ripple Runner way back in the day...), but unfortunately, there are a couple of problems here:

First, this isn't latency aware, so all of the problems that are listed above apply. audioSource.time tells you "the playback position of the clip from the point of view of the engine", but this doesn't take into account all of the additional processing steps that happen later down the audio processing chain.

Second, audioSource.time doesn't actually update smoothly between frames. So you may get odd results like audioSource.time reading the same value in two consecutive frames, or changing by twice as much during one frame as the next, which results in stuttery movement. Fundamentally this is due to the audio system running on an entirely different timeline than normal "game time", but also because this value is based on the start of the current audio buffer, not the current playback position.

Using PlayScheduled

Unity exposes an AudioSettings.dspTime value which will return the current time from the audio system's point of view. From here on out I'll refer to this as "DSP Time", as opposed to "Game Time" which is the frame-based timing that you'd get from Unity's Time.unscaledTime value. If you ever get confused, remember that for the most part, doubles are DSP time and floats are game time.

We can use Unity's AudioSource.PlayScheduled function in order to schedule our backing track in advance at a particular Audio DSP time. Given enough of a scheduling window (I use a full second, which should be plenty), this guarantees that the audio track will consistently start playing exactly at that Audio DSP time (though of course, there will still be latency...).

We call this schedule point _audioDspStartTime. This represents the DSP Time at which the music track first starts playing.

void Start() {

_audioDspStartTime = AudioSettings.dspTime + kStartScheduleBuffer;

_musicSource.PlayScheduled(_audioDspStartTime);

}

Unfortunately, there's no "exact" way to map from a DSP timestamp to a game time value, since the two systems update at different intervals. However, we can get pretty close by using a linear regression. The Twitter thread at https://twitter.com/FreyaHolmer/status/845954862403780609 contains a graph illustration of what this looks like, if that helps.

I have an AudioDspTimeKeeper script that is responsible for managing this linear regression mapping throughout the application's lifetime:

void Update() {

float currentGameTime = Time.realtimeSinceStartup;

double currentDspTime = AudioSettings.dspTime;

// Update our linear regression model by adding another data point.

UpdateLinearRegression(currentGameTime, currentDspTime);

}

Here, UpdateLinearRegression() is just a bunch of statistics math that uses the average, variance, and covariance to establish a linear regression model. I'm sure you can find an implementation in your favorite programming language if you search for it. Currently I keep a rolling average of 15 data points for this regression.

The output of the linear regression model is a set of two coefficients which determines a line mapping, so we can then expose the following function:

public double SmoothedDSPTime() {

return Time.unscaledTimeAsDouble * _coeff1 + _coeff2;

}

There's one more detail that needs to be addressed, which is that since our linear regression model is constantly being updated, we might get a little bit of jitter. That's fine, but we should make sure that our SmoothedDSPTime() function is monotonically increasing, otherwise there's a chance that the player might move backwards for a frame, which would probably break a lot of things:

public double SmoothedDSPTime() {

double result = Time.unscaledTimeAsDouble * _coeff1 + _coeff2;

if (result > _currentSmoothedDSPTime) {

_currentSmoothedDSPTime = result;

}

return _currentSmoothedDSPTime;

}
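For readers who want to see the regression spelled out, here is one way the rolling fit and the monotonic clamp could look outside of Unity. This is a sketch under assumptions, not the actual AudioDspTimeKeeper: `SmoothedClock`, `AddSample`, and `Evaluate` are hypothetical names, both clocks are passed in as doubles for simplicity, and the fit is a plain least-squares line over the most recent samples (15 by default, matching the window size mentioned above).

```csharp
using System;
using System.Collections.Generic;

// Sketch of a rolling least-squares fit from game time to DSP time.
// SmoothedClock is a hypothetical name, not the post's AudioDspTimeKeeper.
public sealed class SmoothedClock
{
    private readonly int _capacity;
    private readonly Queue<KeyValuePair<double, double>> _samples =
        new Queue<KeyValuePair<double, double>>();
    private double _slope = 1.0;
    private double _intercept = 0.0;
    private double _lastResult = double.NegativeInfinity;

    public SmoothedClock(int capacity = 15)
    {
        _capacity = capacity;
    }

    // Call once per frame with the current game time and DSP time.
    public void AddSample(double gameTime, double dspTime)
    {
        _samples.Enqueue(new KeyValuePair<double, double>(gameTime, dspTime));
        if (_samples.Count > _capacity) _samples.Dequeue();

        double meanX = 0.0, meanY = 0.0;
        foreach (var s in _samples) { meanX += s.Key; meanY += s.Value; }
        meanX /= _samples.Count;
        meanY /= _samples.Count;

        double varX = 0.0, covXY = 0.0;
        foreach (var s in _samples)
        {
            varX += (s.Key - meanX) * (s.Key - meanX);
            covXY += (s.Key - meanX) * (s.Value - meanY);
        }

        // With no spread in x the slope is undefined; fall back to 1:1.
        _slope = varX > 0.0 ? covXY / varX : 1.0;
        _intercept = meanY - _slope * meanX;
    }

    // Maps a game time to a smoothed DSP time that never decreases.
    public double Evaluate(double gameTime)
    {
        double result = gameTime * _slope + _intercept;
        if (result > _lastResult) _lastResult = result;
        return _lastResult;
    }
}
```

Recomputing the sums every frame is fine for a window this small; a running-sum version would avoid the loops but is harder to read.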

We now have an AudioDspTimeKeeper.SmoothedDSPTime() function that will give us the (smoothed) current audio DSP time for the current frame. We can now use this as our timekeeping function, in conjunction with our _audioDspStartTime that we set when we first scheduled the backing music track:

double GetCurrentTimeInSong() {

return AudioDspTimeKeeper.SmoothedDSPTime() - _audioDspStartTime;

}

And we can simply swap this into our naive approach:

void Update() {

Vector3 position = player.transform.position;

position.x = (float)(GetCurrentTimeInSong() * SOME_SPEED_CONSTANT);

player.transform.position = position;

}

Adding latency compensation into the mix is actually really easy! We can add it here:

double GetCurrentTimeInSong() {

return AudioDspTimeKeeper.SmoothedDSPTime() - _audioDspStartTime - _latencyAdjustment;

}

So for example, with a latency adjustment of 0.05 (50 milliseconds), GetCurrentTimeInSong() will return a value 50 milliseconds lower than it would normally, which means that the player's position will be slightly to the left of where it otherwise would be.

Of course, the hard part is determining what _latencyAdjustment should be, as this is extremely device-specific and will need to be determined via player calibration. But that's a subject for another time...

Resynchronization Fallback

In theory and in practice, everything above works just great. ...as long as nothing goes wrong.

However, the system is a little brittle, as it depends on a single reference point for song progress (this is our _audioDspStartTime value). Usually this is fine, but there are a number of things that could cause the audio playback to stutter and become misaligned with what should actually be playing:

- Audio buffer underruns if the audio buffer size is too low to mix everything in time

- Some sort of CPU spike which causes the application to lag

- The application could be forcibly paused -- for example, this happens when dragging/resizing applications around the desktop in Windows.

- The application could be minimized, or even backgrounded on a mobile device

- etc...

As a sanity check, I check our smoothed DSP time against the value from AudioSource.time. As mentioned earlier, we should never use this value directly in our game calculations due to jitter, but it still provides a nice sanity check in case something went wrong.

void CheckForDrift() {

double timeFromDSP = AudioDspTimeKeeper.SmoothedDSPTime() - _audioDspStartTime;

double timeFromAudioSource = _musicSource.time;

double drift = timeFromDSP - timeFromAudioSource;

if (System.Math.Abs(drift) > kTimingDriftMargin) {

Debug.LogWarningFormat("Music drift of {0} detected, resyncing!", drift);

_audioDspStartTime += drift;

}

}

Currently I have kTimingDriftMargin at 50 milliseconds, which doesn't seem to trigger unless something actually did go wrong. Unfortunately, this "resync correction" won't be as good or consistent as the original synchronization, but hopefully this won't be needed very often and is just a failsafe. In the future, I'll probably need to add additional fallbacks here, in case for example the audioSource stops playing entirely for some reason, or never starts even though PlayScheduled was called. This is currently a TODO item for me! Phew! Apologies for the lack of images in this post, but unfortunately animated gifs don't really help when trying to show off audio synchronization and I don't happen to have any fancy diagrams on hand to help explain this stuff. :x Hopefully this all made some amount of sense regardless! If not, again I would highly recommend reading Exceed7's work on this (see https://exceed7.com/native-audio/rhythm-game-crash-course/backing-track.html for the post on backing track synchronization) for a more detailed explanation.


Jasmine

« Reply #5 on: June 11, 2021, 06:56:31 PM »

This is an awesome concept!


DDRKirby(ISQ)

« Reply #6 on: June 18, 2021, 02:58:06 PM »

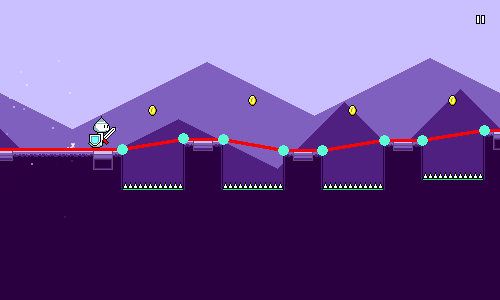

Water/Air Jump Prototyping

No technical deep dive this week -- instead I just wanted to post a video showing off some new mechanics that I've been prototyping.

The first new mechanic is "water sections" where the scroll rate of the song slows down:

I haven't completely decided how to use this yet. In the video above I've done some playing around with making these sections feature triplet meter as a way of making the rhythms more interesting, but I'm not too sure I'll stick to that as most of my other mechanics aren't built for it and it doesn't seem to have a ton of depth. Perhaps that might be better as a separate type of enemy?

So right now it's mainly just an aesthetic thing that changes the feel of that section of music. It also slows down your jump speed, as that seemed to fit nicely, so jumps are two beats instead of one. The key is going to be building the music in a way that makes sense with these sections (half-tempo drums, pitch shifting, etc). This will probably be more of a one-world gimmick than a mainstay mechanic for the entire game.

Visually I've reused the water shader from level 1-3, which uses simple sine wave patterns to offset the drawn texture. For capturing the input to the shader, you could use a GrabPass, but I instead chose to implement this by using a Render Texture. For each underwater section, there's a separate camera which grabs what would be shown on the screen and writes it to a Render Texture. There's then a simple rectangular quad Mesh Renderer that draws the Render Texture on top of everything else using my water shader. (Of course, this quad should be invisible to the water camera.)

It's important that the quad is drawn above everything else, but unfortunately, Unity doesn't actually expose the 2D sorting order of the Mesh Renderer component in the inspector. Luckily, you can expose it using an editor script: see https://gist.github.com/pavel-fadrhonc/ecbe4ff18e1a4a38a62214bbab69a0e2

There are some other things that I could do to get this looking nicer (bubble particles, color tweaks, better "splash" animations), but this is working fine for now.

The next set of things that I've been working on is mid-air jumps and the ability to support various complex combinations of jumping and flying. The mid-air jumps were simpler to implement than the press-and-hold flight paths, and I actually had them working a while ago, but the code was pretty old and needed to be brought back up to speed to function properly again.

This is where you can really see the rhythms start to get more complex. Obviously I wouldn't throw players into this sort of dense pattern right off the bat; these sequences were designed to test my level generation code more than anything else.

Getting all of this working involved a bunch of changes to the level generation code, as there were previously a lot of assumptions being made there that are no longer true with these combinations. I'll have to continue to clean this up in the future as well -- it's an ongoing process as I add in more features and learn more about the best way to structure things. Fortunately, it seems to be in pretty decent shape so far.


DDRKirby(ISQ)

« Reply #7 on: June 25, 2021, 02:19:03 PM »

Spike/Teleport Enemies Demo

This week I continued mostly working on new mechanic prototyping. I've got a couple more new things to show off!

First up we've got these new teleporting ghost enemies:

Each one of these takes three hits to destroy and currently uses a dotted-quarter-note rhythm to provide some syncopation. I can potentially switch this rhythm per-level, but I think the three dotted-quarter-note hits probably work better than anything else so I don't really see a need to do that.

I wanted another attack-based mechanic and thought this might be a good one as it makes reading rhythms a bit less obvious. The double-hit enemies play around in a similar space, but this one is a bit more complicated as it can be interleaved with other obstacles. Part of the charm of making a platformer/rhythm hybrid is that you can make the rhythms harder to "translate" than with traditional music games, so your brain has to work a little more to parse them out. My other game Melody Muncher plays with this in the same way.

There's a neat little after-image "teleport" effect that's used between hits. Here's what that looks like slowed down:

Implementation-wise this is actually pretty simple. The enemy object moves at a fast rate towards the destination (actually, the hitboxes and main entity teleport there instantly; it's just the visuals that lag behind), and as it does so, it emits particles. The yellow star particles are pretty obvious -- they just get created with a random outward velocity and fade out quickly. However, the "after-images" are actually particles as well -- they just happen to not have any motion, so they just appear and then fade out while remaining in place. (And they of course are sized to be the same as the actual main sprite.)

Intuitively, you could implement this by simply attaching a particle emitter to the enemy and then toggling it on and off. I actually didn't do that -- I chose instead to just have a global particle emitter that's shared across all of the enemies, and then each enemy just tells that global emitter to emit particles at the appropriate locations. This is most likely (?) better for performance, though it probably doesn't matter a ton here.

As a final touch you might notice that the main enemy sprite flashes as it travels. This is done very simply using a shader that inverts the color of the sprite. Something like this:

fixed4 frag(v2f IN) : SV_Target

{

fixed4 texcol = SampleSpriteTexture (IN.texcoord) * IN.color;

if (_Invert) {

texcol.rgb = float3(1.0, 1.0, 1.0) - texcol.rgb;

}

// ...

return texcol;

}

The "_Invert" flag here uses Unity's "[PerRendererData]" construct along with "MaterialPropertyBlock"s so that it can be modified per instance whilst still using a single shared material. Feel free to read up on those concepts elsewhere if that all sounds foreign to you.

Next up we have these fun little rolling spike enemies:

These were actually incredibly easy to code up. The entirety of the SpikeEnemy script looks like this:

    using System;
    using UnityEngine;

    public class SpikeEnemy : MonoBehaviour
    {
        [NonSerialized]
        public float Beat; // This gets set during level generation.

        protected virtual void Update() {
            float currentBeat = MusicController.CurrentBeat();
            float x = LevelController.Stats.BeatToX(Beat + 0.5f * (Beat - currentBeat));
            float y = LevelController.Stats.XToGroundY.Get(x);
            transform.position = new Vector3(x, y, 0.0f);
        }
    }
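The x-coordinate expression in Update() is the only non-obvious line in that script. As a rough check of what it does, here is a small sketch that evaluates just the beat value passed to BeatToX, using plain numbers and no Unity dependencies. The class and method names (SpikeBeatMath, VisualBeat) are made up for illustration, and BeatToX itself is left out since its implementation isn't shown here.

```csharp
using System;

public static class SpikeBeatMath
{
    // The same expression SpikeEnemy passes to BeatToX, isolated so it can be
    // evaluated on its own. "speed" is the 0.5f constant from the script.
    public static float VisualBeat(float enemyBeat, float currentBeat, float speed = 0.5f)
    {
        return enemyBeat + speed * (enemyBeat - currentBeat);
    }
}
```

For an enemy placed on beat 16, this gives 18 when the song is at beat 12, 17 at beat 14, exactly 16 at beat 16, and 15 at beat 18. In other words, the enemy's position slides backwards by half a beat for every beat of music that plays, and it lines up with its "real" beat at the moment the player reaches it.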

That's it! The actual interaction is done by simply affixing a "killzone" collider to it -- I already have logic set up so that any collider that is in the "Death" layer ends up triggering a respawn instantly on contact.

LevelController.Stats is the bookkeeping singleton that gets populated with all sorts of different lookup/conversion functions and tracking data during the level generation process. For example, converting between x-coordinates and music beats, or looking up the height of the "ground" at a given x-coordinate, or what the player's y-coordinate is supposed to be at a given x-coordinate.

The only real tricky part here is the calculation of the x-coordinate, which uses a funny Beat + 0.5f * (Beat - currentBeat) calculation. This is essentially just making it so that when currentBeat = Beat, we just use BeatToX(Beat), which places the enemy at the appropriate x-coordinate. Outside of that, the 0.5f * (Beat - currentBeat) term means that the enemy will travel to the left at 50% of the speed of the song. (100% would be too fast to react to!)

One other thing I showed off in the video is the seamless respawn procedure, which gives you a "rewind, cue in" when you restart a section, all to the beat:

I could easily write an entire article on how this is done (though I've forgotten a lot of the details since I implemented it ~2 years ago), but essentially, the game is always playing a seamless background loop that's perfectly in sync with the main music track -- except it's muted during normal gameplay. When you respawn, we mute the normal track and unmute the background track. (Since they're both in sync, the beatmatching is preserved.) Then it's just a matter of calculating the next downbeat at which we can drop the player into the checkpoint. We then figure out the timing and scheduling for rewinding the music position to the appropriate point, reschedule audio playback, and set up the appropriate tweens.

That's all super complicated to actually achieve in practice, but I'm going to just handwave it all away at the moment as I don't want to get into it right now. But it's all very slick when it comes together and really lets you stay in the action across respawns.

The last thing I wanted to point out today is more of a minor change. There's now a "cloud poof" animation for air jumps, as well as a quick _Invert flash, which is meant to serve as a more obvious/flashy visual confirmation for doing air jumps and flying:

Phew -- that's all for this week!

DDRKirby(ISQ)

« Reply #8 on: July 02, 2021, 02:49:47 PM »

Build Pipelines

I didn't work on anything exciting this past week (menu handling, accessibility, localization, fonts...), so I thought that I'd instead do a brief run-down of the build pipelines for Rhythm Quest. Build and deploy flows are always changing (for better and worse...) so the steps here might become outdated at some point, but perhaps it'll serve as a useful starting point for someone else thinking about how to make builds and deploys more convenient.

Premise

The first thing you should understand is how painful it is to build and deploy a game manually, without any sort of build automation or scripting. Here are the rough steps needed in order to build and deploy a new build of a Unity game for iOS:

- Switch Unity platform to iOS
- (Wait a long time for assets to reimport...)
- Start the build from Unity -- an XCode project is created (after some time...)
- Open the XCode project and create a project archive
- (Wait a long time for the build to finish...)
- Upload the archive to the App Store
- Wait a long time for the archive to be uploaded and processed by Apple
- Log in to App Store Connect, approve the new build and deploy to Testflight

...and that's just for ONE build platform. Rhythm Quest supports iOS, Android, Windows 32-bit, Windows 64-bit, Mac, and Linux, so just imagine going through a similar (but different!) set of steps for 6 different builds. It's clear that we need some sort of automation structure in order to make our lives easier.

Unity Batch Mode

The first improvement we can make is to allow Unity to make builds through the command-line. This is done by creating static methods that execute the build via BuildPipeline.BuildPlayer(), then using the -batchmode, -nographics, and -executeMethod flags to run Unity in batch mode and execute that build method.

You can do a bunch of other logic in your build method while you're at it, for example reading the version number from a text file:

    string version = PlayerSettings.bundleVersion;
    try {
        using (TextReader reader = File.OpenText(Application.dataPath + "/Publishing/version.txt")) {
            version = reader.ReadLine();
        }
        Debug.LogFormat("Using {0} as bundle version", version);
        PlayerSettings.bundleVersion = version;
    } catch {
        Debug.LogErrorFormat("Unable to read bundle version from version.txt, using {0} as version", version);
    }

Or using compile-time define flags depending on build parameters, useful for something like demo builds:

    string scriptingDefines = demo ? "DEMO" : "";
    PlayerSettings.SetScriptingDefineSymbolsForGroup(BuildTargetGroup.Standalone, scriptingDefines);
    PlayerSettings.SetScriptingDefineSymbolsForGroup(BuildTargetGroup.iOS, scriptingDefines);
    PlayerSettings.SetScriptingDefineSymbolsForGroup(BuildTargetGroup.Android, scriptingDefines);

We can now run a Unity build via command-line, but there's still a ton of other steps that need to be done, like incrementing the bundle version number, building the XCode project, uploading to Testflight, etc. The best tool I've found to handle all of this stuff is fastlane ( https://docs.fastlane.tools/).

Fastlane allows you to define different "lanes", each of which will execute a given sequence of actions for your project. Here's what my iOS build lane looks like (excuse the hardcoded Unity path):

    lane :ios do
      unity(
        build_target: "iOS",
        unity_path: "/Applications/Unity/Hub/Editor/2021.1.7f1/Unity.app/Contents/MacOS/Unity",
        execute_method: "BuildScripts.Ios",
      )
      increment_build_number(
        build_number: latest_testflight_build_number + 1,
        xcodeproj: "./Builds/ios/Unity-iPhone.xcodeproj",
      )
      gym(
        project: "./Builds/ios/Unity-iPhone.xcodeproj",
        xcargs: "-allowProvisioningUpdates",
        output_directory: "./Builds/ios",
      )
    end

The idea of defining a sequence of actions is pretty trivial -- you could just script that yourself if you wanted to. The real value in using fastlane is having access to the large repository of built-in functions for interfacing with the iOS and Android ecosystem.

For example, the increment_build_number command is able to automatically bump the build number of an XCode project file, so you don't have to modify it manually. Even better, the latest_testflight_build_number + 1 snippet actually executes a separate command which logs in to Testflight, pulls down build info, and grabs the latest build number, then adds 1 to it.

Fastlane also provides commands for deploying the packages once built:

    lane :deploy_ios_testing do
      changelog = File.read("../Assets/Publishing/changelog.txt")
      app_store_connect_api_key(
        key_id: ********,
        issuer_id: *******************************,
        key_filepath: ***************,
      )
      upload_to_testflight(
        ipa: "/Users/ddrkirbyisq/Coding/network-share/rhythm-quest/ios/latest/RhythmQuest.ipa",
        distribute_external: true,
        groups: "ExternalTesters",
        changelog: changelog
      )
    end

No need to paste your changelog into App Store Connect manually -- fastlane can automatically read it from a file and take care of it for you! No need to wait until your build finishes processing -- fastlane can poll the App Store Connect backend and automatically trigger the distribution of the new build!

Though I ran into a few minor issues with fastlane (Unity builds stalled when executed through fastlane on Windows for some odd reason), for the most part it is well-documented and takes care of a lot of the annoying heavy lifting around Android/iOS deployment for you.

Jenkins

Now that we have nice fastlane/shell commands for doing builds and deployments, we can move on to managing our build pipelines via a more structured system. Jenkins ( https://www.jenkins.io/) is an open source automation server that's commonly used for this sort of thing.

Normally in larger teams you'd host Jenkins on some sort of build machine that anyone can access remotely, but for my purposes I'm simply running it locally on my own machines and hitting it via localhost:8080.

You could of course just manually run the build/deploy commands yourself, but using Jenkins provides a number of advantages over that approach:

- Ability to manage multiple workspaces -- important since Unity can't do multiple simultaneous builds in the same workspace
- Comes with built-in integration with git -- can auto-fetch the latest commit to build, deal with submodules, etc.
- Provides simple visual indications (or even email notifications) of build status/success
- Retains console log output so you can diagnose/debug when something went wrong
- etc...

I actually have two separate instances of Jenkins running on different machines. This is an unfortunate necessity since there's currently no way to make all of the necessary builds using a single OS -- iOS/OSX builds can't be made from Windows and Windows builds can't be made from OSX. (At least there's a cross-compiler available for Linux, so I don't have to boot into a third OS...)

If I was really ambitious I'd be able to manage everything through a single Jenkins instance and use one computer as the master and another as a slave, but I haven't bothered doing that since it didn't seem necessary.

Past that, I have separate Jenkins subjobs set up for each build platform, and then a single master job that will trigger each of those subjobs. Right now I only have jobs set up for the build process, and not the deploy commands, since I like to run the deploy commands manually (they don't take as long, and this lets me double-check the builds before deploying).

One issue I ran into was how to organize the builds for deployment, since some were built on the Windows machine and others were built on the OSX machine. I needed to get them all in the same place so that I could, for example, deploy them all in a bundle to Steam or itch.io.

I ended up setting up a network share and having Jenkins copy the builds into the network share once each one was complete. Setting up the shared folder is a relatively straightforward process and doesn't require any special software -- it's built in to the OS (look under System Preferences -> Sharing -> File Sharing on OSX).

Miscellaneous

While fastlane has built-in commands for deploying to iOS and Android, I still had to take care of deployments for desktop builds to itch.io and Steam.

itch.io has its own small command-line utility for pushing builds -- butler ( https://itch.io/docs/butler/). butler is very easy to use and simple to integrate into the pipeline as well. My fastlane deployment setup for itch.io is therefore very simple and just runs butler 4 times via shell, grabbing the version number from a text file:

    lane :deploy_itch do
      sh('butler', 'push', '/Users/ddrkirbyisq/Coding/network-share/rhythm-quest/win32/latest', 'ddrkirbyisq/rhythm-quest:win32', '--userversion-file', '../Assets/Publishing/version.txt')
      sh('butler', 'push', '/Users/ddrkirbyisq/Coding/network-share/rhythm-quest/win64/latest', 'ddrkirbyisq/rhythm-quest:win64', '--userversion-file', '../Assets/Publishing/version.txt')
      sh('butler', 'push', '/Users/ddrkirbyisq/Coding/network-share/rhythm-quest/osx/latest', 'ddrkirbyisq/rhythm-quest:osx', '--userversion-file', '../Assets/Publishing/version.txt')
      sh('butler', 'push', '/Users/ddrkirbyisq/Coding/network-share/rhythm-quest/linux/latest', 'ddrkirbyisq/rhythm-quest:linux', '--userversion-file', '../Assets/Publishing/version.txt')
    end

Steam is a little less straightforward as you need to use the Steamworks SDK and set up a configuration file which tells Steam which directories to parse for the build for each build platform. This process is documented on the Steamworks site. I had a bit of trepidation as the OSX version of the command-line tools didn't seem to be as well documented (and I prefer to run most of the automation/scripting stuff on OSX), but I haven't really run into many issues there, so it's all working fine.

Summary

In the end, my entire setup and process looks like this:

- Two computers - one running Windows, one running OSX
- Each computer has a local Jenkins server
  - Click a Jenkins button on the Windows box to start builds for Win32, Win64, Win64Demo
  - Click a Jenkins button on the OSX box to start builds for Android, iOS, Linux, OSX
- Most of the platform-specific builds are run through fastlane
  - A static Unity build method is invoked in batch mode to kick off the Unity part of the build
  - fastlane takes care of updating version numbers, building XCode projects, etc.
- Builds are all copied to a shared network folder on the OSX machine when done
- Once I've verified the builds, I run deployments via fastlane commands
  - Android and iOS deploys use commands built-in to fastlane
  - itch.io deploys use butler
  - Steam deploys use the steamworks command-line builder tool

Setting up all of these build processes and pipelines was actually one of the first things that I did back in May when I picked up Rhythm Quest again. Part of it was because I knew it would be really important later down the road, but part of it was also just because I was interested in seeing how well this problem can be solved nowadays.

If you were involved in app development years ago when Android and iOS were still in their infancy, you probably remember all sorts of horrors about having to manually deal with signing certificates, provisioning profiles, and all sorts of other such things. Tools like fastlane didn't exist at the time, so building out this sort of automated pipeline wasn't really even possible.

The ability to run builds and deploys across multiple platforms by clicking a single button shouldn't be underestimated. (In fact, one of the reasons I'm moving away from Unity and towards Godot is the simple fact that Godot build times are orders of magnitude faster...)

DDRKirby(ISQ)

« Reply #9 on: July 25, 2021, 04:19:51 PM »

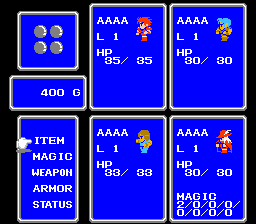

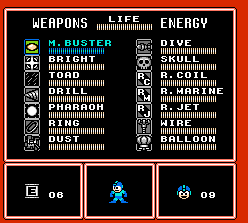

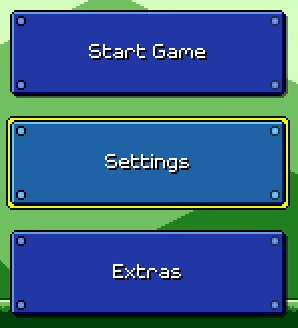

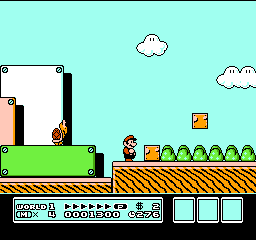

Menu Rework

So I didn't have the most productive past ~two weeks, mostly due to extra stuff happening in my life. I decided to stop work on gameplay stuff (partly because I had exhausted my gameplay ideas for now), but I managed to get a lot of UI work done instead. A lot of this work isn't particularly glorious or exciting, but a lot of dull work often goes into making a game polished and robust. I did manage to work in some neat music reactivity, so I'll talk about that as well.

Before and After

Here's a video showing roughly what the menu looked like to start with:

I hadn't spent a lot of time working on this, so it's understandably pretty basic. There's no keyboard navigation support, everything is super basic and bland, and there's tons of empty space everywhere.

Now, here's a video of the menu in its new state:

There's still some more work to be done here, but it's certainly come a long way!

New Button Graphics

The old buttons were designed a looooooong time ago and basically referenced the plain blue window style in some of the Final Fantasy games:

One issue here is the lack of contrast between the button background and the white font, especially for the "selected" variant of the button. That was easy to fix (just use a darker color...), but I also wanted to look at some other ideas for simple button shapes that looked a little less busy.

At this time I was also trying to brainstorm ways to make the menu react to the music "somehow", without being too distracting. I knew the button graphics could play into this.

I knew that I still wanted a pixel-art-styled button graphic, similar to something you'd see in a classic video game, so I looked around at a few different examples of rectangular windows and menus in older games:

In the end I decided to just draw out a simple flat (darker) blue shape, doing away with the distracting white border. I added some light shading and four circles on the corners as accents.
The four corner accents actually call back to these rectangular level structures from Super Mario Bros. 3:

Music Reactivity

One of the main goals for this entire rework was to add some sort of music reactivity to the menu system. During the ~1 year period where I had stopped working on Rhythm Quest, I had been doing a lot of thinking about how to make an interesting and appealing menu system without involving a ton of work (i.e. beautiful art which I'm incapable of drawing). The answer ended up being to bring the "music-first" ethos of the gameplay into the rest of the game.

For some interesting context, back in the day (around November 2017) the level selection screen was actually a full-fledged Super Mario World-like map scene:

This was pretty cool, and if my strengths were a bit different I would have explored this idea a little more, but it became apparent that trying to do this sort of art wasn't really scalable for me, at least not in this form. More importantly, the map just wasn't...interesting enough. It wasn't bad, but it wasn't really super exciting either.

I realized here that I really wanted some sort of music-reactive map. I probably could have explored some sort of Super Mario Bros. 3 style graphics with simple animations that synced to the beat:

...but in the end I decided to not pursue this idea and scrapped it in favor of a simpler (but hopefully more effective) menu screen.

Beat Sync

Fortunately, music synchronization was already more or less a solved problem for me at this point. See Devlog #4 for an explanation of how that works. I haven't explained how to tie it to the actual beat of the music, but that's not too hard:

    float GetIntensity(float offset, float patternLength) {
        // (Gets the current time, then converts from time to beat)
        float beatOffset = MusicController.CurrentBeat();

        // Wrap based on beat pattern length and take the difference from our target.
        // (note: the % operator can give negative values in C#, so I use a wrapper)
        beatOffset = Utils.ModPositive(beatOffset - offset, patternLength);

        // Normalize to 0-1 based on duration of the pulse.
        // (_pulseDuration is assumed to be measured in beats, like beatOffset.)
        float pulseProgress = Mathf.Clamp01(beatOffset / _pulseDuration);

        // Apply some easing (ease out quad):
        pulseProgress = 1.0f - (1.0f - pulseProgress) * (1.0f - pulseProgress);

        // Invert so that we go from 1 to 0 instead of 0 to 1.
        return 1.0f - pulseProgress;
    }
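The Utils.ModPositive wrapper mentioned in the comment above isn't shown, so here is a minimal sketch of what such a helper could look like, along with an engine-free version of the pulse calculation that can be run outside of Unity. The BeatPulse class and the explicit currentBeat and pulseDuration parameters are assumptions made for illustration, and pulseDuration is assumed to be measured in beats.

```csharp
using System;

public static class BeatPulse
{
    // C#'s % operator keeps the sign of the left operand (-1f % 4f is -1f),
    // so a negative remainder is shifted back up by m. Assumes m > 0.
    public static float ModPositive(float x, float m)
    {
        float r = x % m;
        return r < 0f ? r + m : r;
    }

    // Same steps as GetIntensity, but with the current beat passed in:
    // returns 1 right on the target beat and eases down to 0 once
    // pulseDuration beats have passed.
    public static float Intensity(float currentBeat, float offset, float patternLength, float pulseDuration)
    {
        float beatOffset = ModPositive(currentBeat - offset, patternLength);
        float progress = Math.Max(0f, Math.Min(1f, beatOffset / pulseDuration));
        progress = 1f - (1f - progress) * (1f - progress); // ease out quad
        return 1f - progress;
    }
}
```

With a 4-beat pattern, an offset of 0, and a 1-beat pulse, this gives 1 at beat 4, 0.25 at beat 4.5, and 0 from beat 5 until the pattern wraps around again at beat 8.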

So now, making the beat-matched pulsing effects was pretty simple. I made a generic script which can modulate either the opacity or the scale of a graphic based on a given beat pattern, and then applied it to various graphics that were overlaid on top of the base button sprite:

I wanted each separate menu screen (main menu, settings, level select) to potentially have its own music, so I store these beat patterns in the controller script for each menu -- the button graphics find their parent menu on scene initialization.

There's also some spinning visual effects that come in during the second half of each musical phrase:

https://www.youtube.com/watch?v=HbVu_GrsE28

Same idea, but instead of modulating an existing graphic, I just spawn a visual effect at one of the defined points (the effect takes care of deleting itself once finished). These are actually just the same as this visual effect from the main game, which is made by taking two colored squares and rotating them in opposite directions while fading out:

If you look closely, you'll notice that the spinning of the squares slows down as they fade out. This is done by using an aggressive easing function: easeOutQuint. If you're handling the rotation yourself, you can reference the helpful easings.net website to look up these easing functions, but I happen to be using the LeanTween tweening library, which lets you apply these quite easily:

    LeanTween.rotateAroundLocal(
        gameObject,
        Vector3.forward,
        _rotationDegrees,
        _beatDuration * beatLength
    ).setEaseOutQuint();
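For anyone handling the rotation by hand rather than through LeanTween, the easeOutQuint curve as listed on easings.net is 1 - (1 - t)^5. Here's a small sketch of it; the Easing class and the RotationAt helper are hypothetical names used only for illustration, not part of LeanTween or of the game's code.

```csharp
using System;

public static class Easing
{
    // easeOutQuint: moves very quickly at first, then slows to a crawl.
    // t is the normalized progress through the tween, from 0 to 1.
    public static float EaseOutQuint(float t)
    {
        float inv = 1f - t;
        return 1f - inv * inv * inv * inv * inv;
    }

    // Hypothetical helper: how far the square has rotated after "elapsed"
    // seconds of a tween lasting "duration" seconds.
    public static float RotationAt(float elapsed, float duration, float totalDegrees)
    {
        float t = Math.Max(0f, Math.Min(1f, elapsed / duration));
        return totalDegrees * EaseOutQuint(t);
    }
}
```

Halfway through the tween (t = 0.5) the eased value is already 0.96875, so a 360-degree spin has covered 348.75 degrees by then and spends the second half creeping through the last few degrees. That front-loading is what produces the "slowing down as it fades" look.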

Transitions between menu screens are done via a simple slide animation. As I mentioned earlier, different menus can also have different background music loops:

https://www.youtube.com/watch?v=4ZxAP16XrOE

The naive way to implement this would have been to simply crossfade between the two pieces of music during the transition animation. However, I wanted to go a step further, since I had a big intention to try and push the "music first" ideology to as many little elements as possible.

There's all sorts of possibilities for how to handle this. For example, you could predefine set "transition points" in the music (at the end of every measure, for example). Then, when the button is clicked, you can schedule a musical transition at the next transition point, and wait until that happens to execute the slide animation. The problem with this is that adding this sort of delay to the UI interaction feels really annoying and slow.

I played around with a variant of this idea a little bit, but in the end decided that it was best if I had the transition always start immediately. I could still, however, modify the speed and duration of the transition to make it line up with the downbeat in a pleasing way. To do this, we can simply take the current (estimated) playback time and then calculate the time of the next downbeat:

    // (Note that this time will never be "exact" since AudioSettings.dspTime runs on a separate timeline)
    float currentTime = (float)(AudioSettings.dspTime - _audioDspStartTime);

    // (Simple conversion that uses the BPM of the song)
    float currentBeat = _song.TimeToBeat(currentTime);

    // Find the next downbeat.
    float transitionEndBeat = Mathf.CeilToInt(currentBeat);
    float transitionEndTime = _song.BeatToTime(transitionEndBeat);
    float transitionDuration = transitionEndTime - currentTime;

That's pretty much the basic idea, but there's a problem. If the transition happens to start just before a downbeat (e.g. currentBeat = 0.9), then the transition will be jarringly fast. To fix that we can simply add a minimum buffer time so that the subsequent downbeat will be used instead. (This will also help with audio scheduling latency.)

    // We could add the buffer in terms of beats or in terms of seconds.
    // Either way is equivalent here since the entire main menu (currently) has constant BPM.
    float transitionEndBeat = Mathf.CeilToInt(currentBeat + 0.5f);
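To put concrete numbers on the buffer, here's a self-contained sketch of the same calculation. It assumes a constant BPM, and the TimeToBeat/BeatToTime methods here are simple stand-ins for the _song conversions, which aren't shown; the TransitionTiming class itself is made up for illustration.

```csharp
using System;

public static class TransitionTiming
{
    // Constant-BPM conversions, standing in for _song.TimeToBeat / _song.BeatToTime.
    public static float TimeToBeat(float seconds, float bpm) => seconds * bpm / 60f;
    public static float BeatToTime(float beat, float bpm) => beat * 60f / bpm;

    // Seconds until the transition should land: the next whole beat that is
    // at least minBufferBeats away from the current playback position.
    public static float TransitionDuration(float currentTime, float bpm, float minBufferBeats)
    {
        float currentBeat = TimeToBeat(currentTime, bpm);
        float endBeat = (float)Math.Ceiling(currentBeat + minBufferBeats);
        return BeatToTime(endBeat, bpm) - currentTime;
    }
}
```

At 120 BPM, a click at 0.45 seconds lands on beat 0.9. With no buffer the transition would have to finish in roughly 0.05 seconds, but with a half-beat buffer it targets beat 2 instead and lasts roughly 0.55 seconds. More generally, a 0.5-beat buffer keeps every transition at least half a beat long and under one and a half beats.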

In addition to the slide animation, a couple of different things happen with the audio/music during this transition:

- A transition sweep sfx starts playing immediately at the start of the transition
- The new music loop needs to be scheduled to kick in at the end of the transition
- I also schedule a "landing" impact sfx at the end of the transition
- The old music loop needs to be stopped at the end of the transition
- The transition sweep sfx fades out quickly during the last sixteenth note of the transition (quarter of a beat)

At one point I played around with using different transition sweep sfx samples for different-length transitions, but in the end I realized that it was easier and more effective to just use one long sample and then fade it out dynamically based on the transition timing.

    // Calculate transition "fade start" time, when we want to start fading the sweep sfx.
    float transitionFadeTime = _song.BeatToTime(transitionEndBeat - 0.25f);
    float fadeDuration = _song.BeatToTime(0.25f);

    // Play the transition sweep sfx immediately. Retain a handle to the AudioSource so we can fade it.
    AudioSource sweepSource = AudioManager.PlaySound(_sweepSfx);

    // Schedule landing sfx for end of transition.
    AudioManager.PlayScheduled(_transitionEndSfx, _audioDspStartTime + transitionEndTime);

    // Schedule new music loop for end of transition.
    // We need to queue it up at the appropriate offset first!
    _audioSources[newMusicIndex].time = transitionEndTime % _audioSources[newMusicIndex].clip.length;
    _audioSources[newMusicIndex].PlayScheduled(_audioDspStartTime + transitionEndTime);

    // Loop while transition is happening...
    while (AudioSettings.dspTime < _audioDspStartTime + transitionEndTime) {
        // How far are we through the fade section?
        // (dspTime is a double, so cast back down to float.)
        float timeWithinFade = (float)(AudioSettings.dspTime - _audioDspStartTime) - transitionFadeTime;
        float fadeProgress = Mathf.Clamp01(timeWithinFade / fadeDuration);

        // I use an exponent to affect the easing on the fade.
        // An exponent of 0.25 makes the fade happen more on the tail end (ease in).
        sweepSource.volume = Mathf.Pow(1.0f - fadeProgress, 0.25f);
        yield return new WaitForEndOfFrame();
    }
    sweepSource.Stop();

    // Transition should be done now. Stop the old music loop.
    _audioSources[oldMusicIndex].Stop();

A couple of notes to mention about the above. First, Unity currently doesn't have any sort of "StopScheduled" functionality that will allow you to stop playback of an AudioSource given a precise Audio DSP timestamp, so the best we can do is guesstimate based on checking the time each frame.

Secondly, this method of doing audio volume fades is actually non-ideal as it's not sample accurate at all; the volume of the AudioSource is only modified once per visual update, which only happens about 60 times a second as opposed to ~44,000 times a second as it should be. Again, Unity doesn't seem to provide a good way to handle this, so we're stuck with this solution, though fortunately it ends up being "good enough" for the human ear.

Other Stuff

That's about all that I'll cover here, but I want to stress that there is a ton of other miscellaneous work involved here that I haven't even talked about. Very briefly, this includes things such as:

- Allowing for menu navigation with keyboard, mouse, gamepad, OR touch input
- Smartly handling button auto-selection depending on input device (if using keyboard/gamepad, the first option should be highlighted, otherwise not)
- Supporting localization for all text in the menus, including dynamic text
- Supporting screen readers so that visually impaired persons can navigate the menu
- Disallowing menu input while a transition is happening
- Remembering previous menu selection (returning to a previous menu should preserve the selection state)
- Allowing for the menu scene to be loaded to a certain state (i.e. when returning from a level, it should have that level preselected)
- etc...

Unity does its best to help you with some of this -- it already provides an automatic menu navigation mapping system, and its new Input System provides (somewhat obtuse at times) means for automatically switching and detecting input devices. There's even a 3rd-party plugin which does most of the legwork for you for integrating with screenreader interfaces.

But in the end there's still a lot of work (which will unfortunately go unnoticed by most) that needs to be put in in order to make something that Just Does The Right Thing (tm)...

DDRKirby(ISQ)

« Reply #10 on: July 30, 2021, 05:12:50 PM »

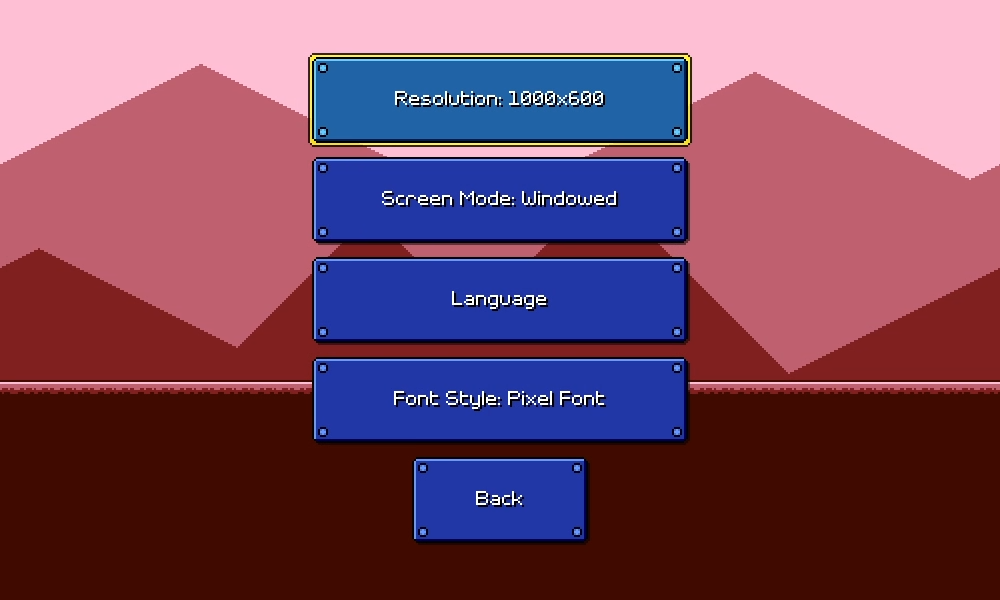

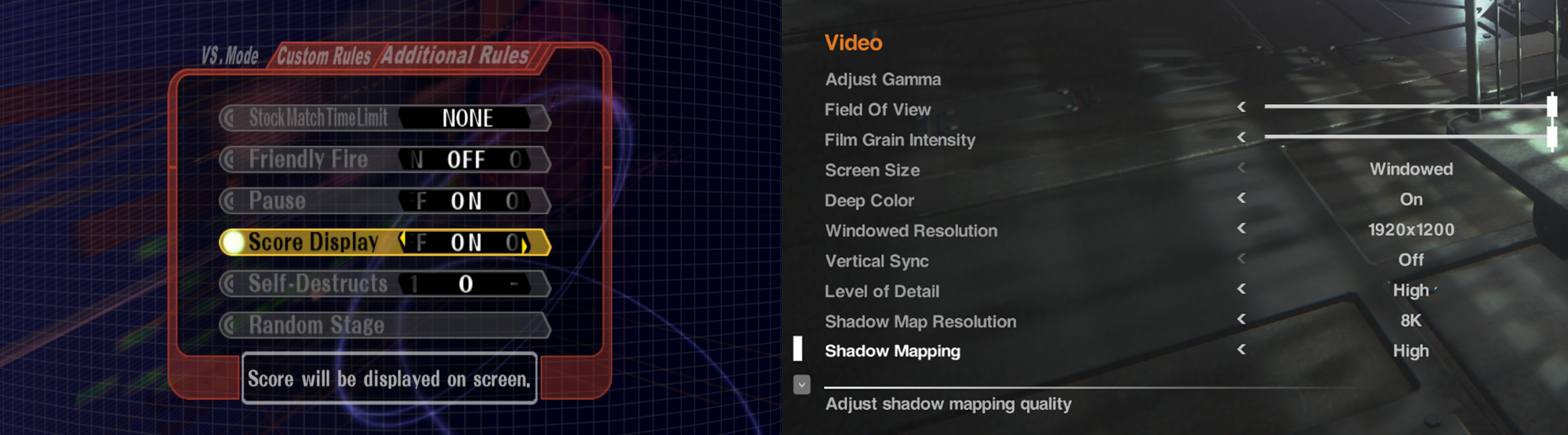

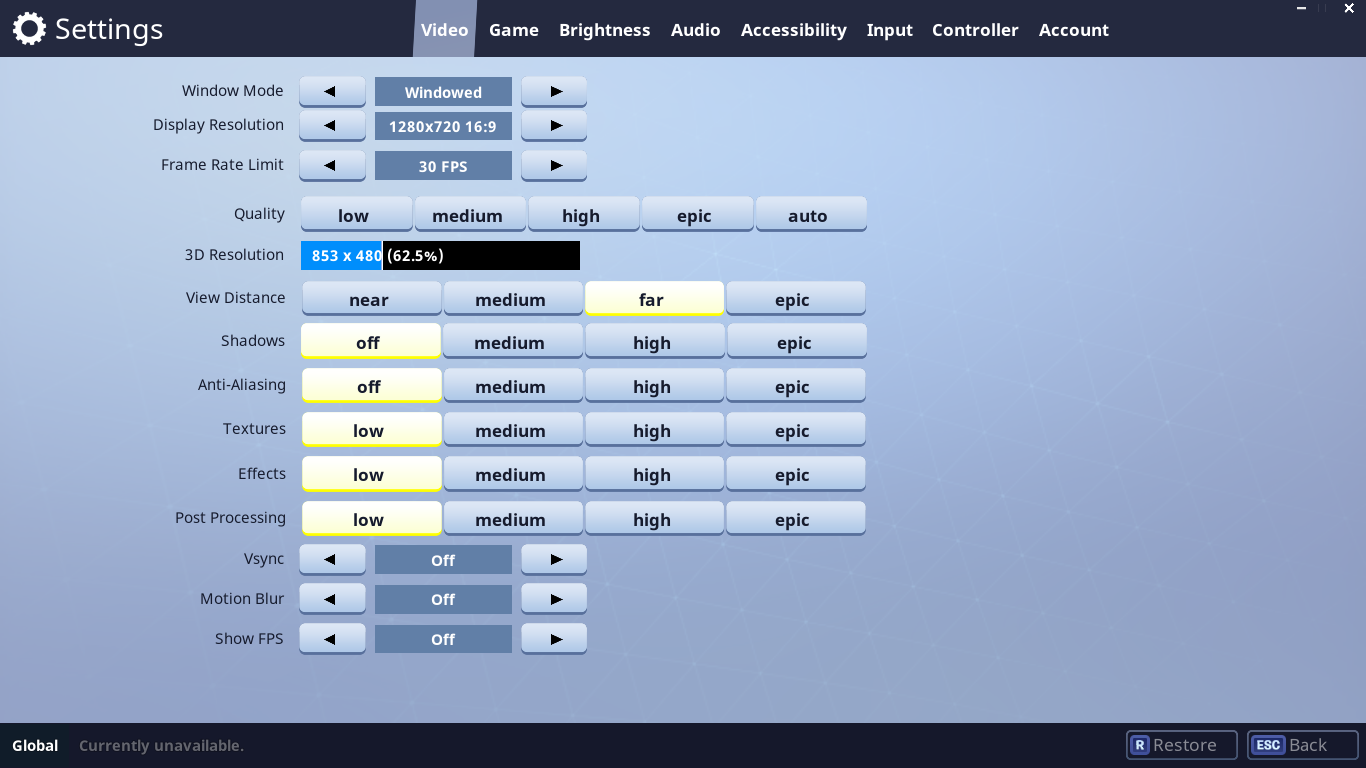

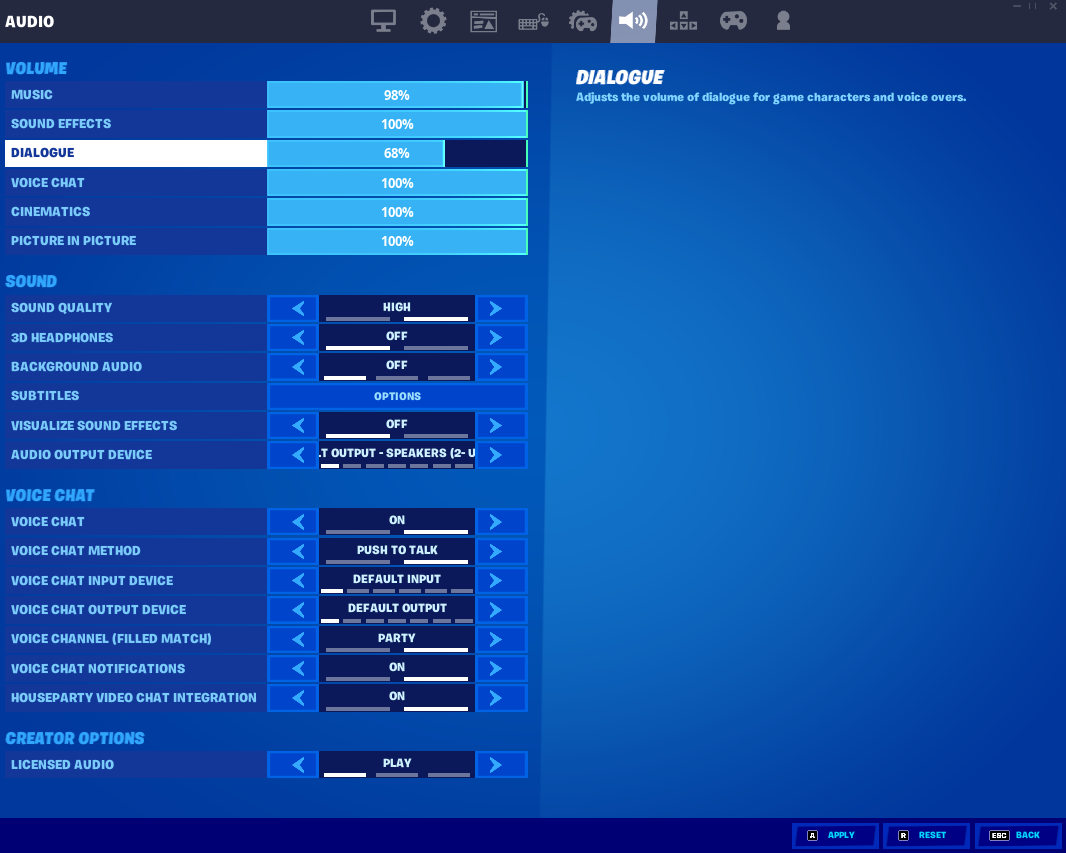

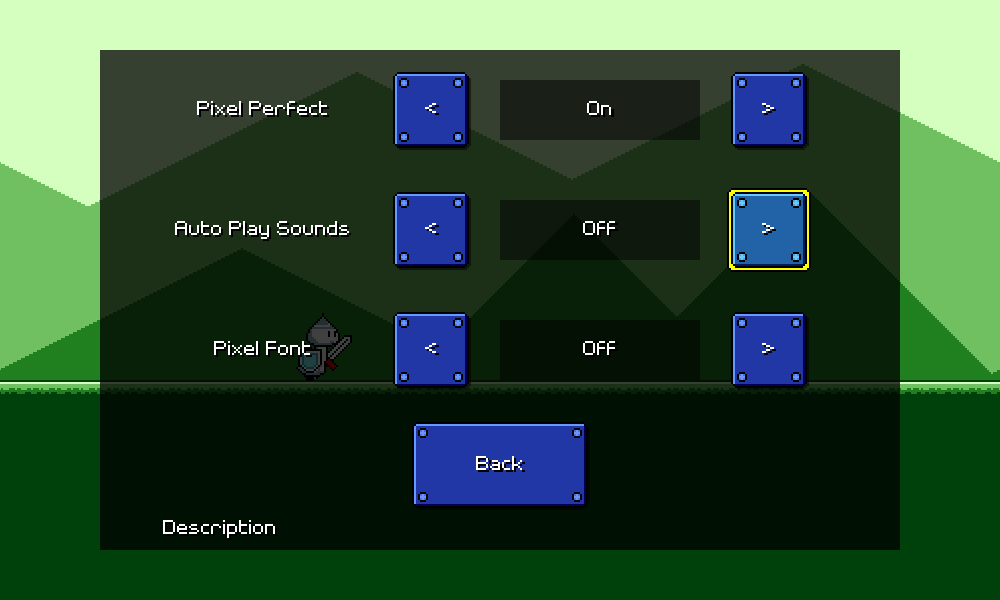

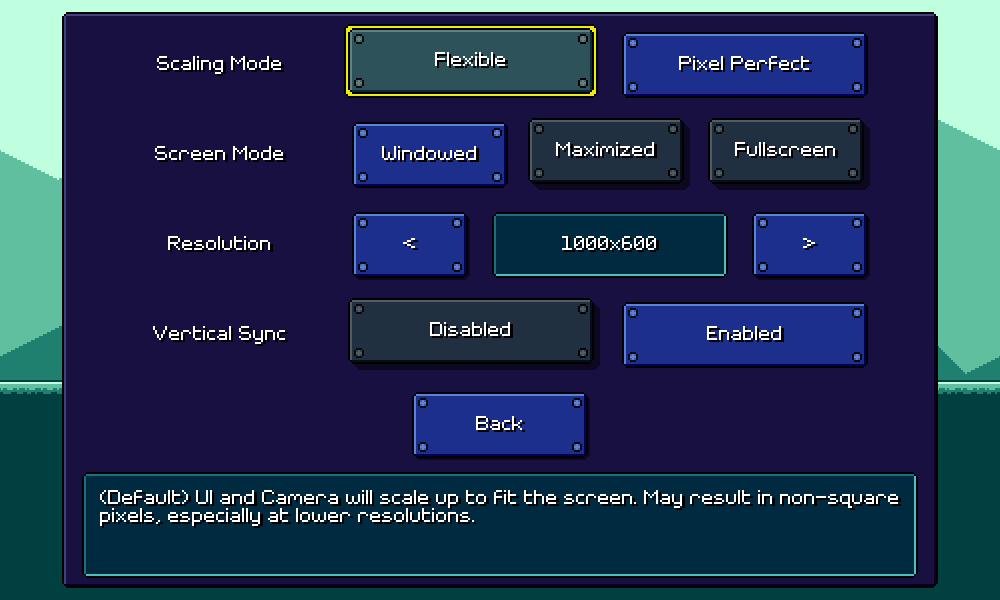

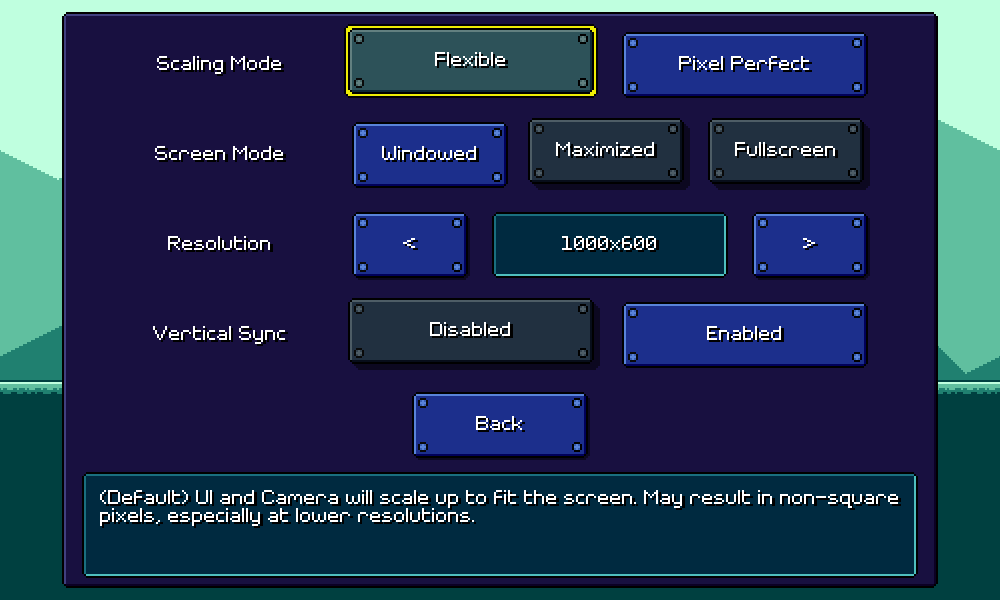

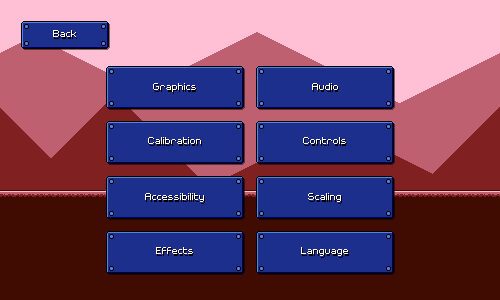

Settings Menus

So this week I've been taking a stab at reworking the settings/options UI in the menu. For reference, this is what the settings screen looked like at the beginning of the week:

It's...okay. Well, sort of. The styling is consistent with the rest of the menu and it's pretty simple to understand, so there's no problem there. You simply tap the respective button (or press enter with it highlighted) in order to swap through the choices for each setting.

The problem is that this doesn't really work out for all types of settings. In particular, volume settings are much better served using some sort of left/right slider that allows you to both increment and decrement the value. Same thing with a windowed resolution selector -- it's a little awkward to have to cycle through all options unidirectionally without being able to see all of them.

Some of these options would also benefit from some sort of additional explanation. A bunch of the accessibility options, for example, or maybe a toggle for whether successful audio cues are prescheduled vs dynamic.

Traditionally this sort of thing is handled by having each setting row show left/right indicators that allow you to scroll through the options. You can also put in a description box for showing info about the currently-highlighted item:

This is a tried-and-true solution, but the problem here is that this UI really doesn't work well for touch/mouse interaction. For touch/mouse controlled games you generally have individual buttons for each available option, or tappable left/right buttons, as well as sliders, so everything is interactable without needing to first have a "selected row":

Again this works OK, but it's not obvious to me how to best apply keyboard navigation to this. Here was my initial attempt at trying to fuse the two navigation modes together:

...no good. You can tell that the two navigation modes are really just fighting each other. It's kind of awkward trying to have left/right arrows that are trying to be indicators of keyboard movement, but also trying to be tappable buttons. I think we have to get rid of the concept of a "highlighted row", or at least clean up the UI so that it's reflected more clearly.

Interestingly enough, Fortnite (the one easy example I could think of where a game is designed to handle all these input modes) actually does take this hybrid approach:

So it IS workable if I decide to go down this route, but I need to style my menu differently in order to get it to look right. Curiously, Fortnite didn't seem to allow me to navigate the menu with a keyboard alone -- I had to plug in a gamepad to get that style of navigation working. I guess they assume that if you're going to play with a keyboard, you're also going to be using a mouse anyways.

Here's a different idea:

I actually think this is pretty promising. The keyboard/gamepad navigation experience definitely suffers a bit because navigating to these different onscreen buttons isn't the most graceful, but it's easy to understand and more importantly, follows the same UI paradigm as all of the other menus, so you don't have to learn a new system of thinking about the interface.

Of course, it doesn't really make sense to have arrow buttons for binary settings that only have on-off toggles. We should just call out the two values instead, like this:

That's starting to make a lot more sense now. The description text will show information for whatever option you have your cursor hovering over, so I also like the fact that you can read about a particular choice before applying it.

Of course, I'm now adding a new "darkened" state to all my buttons, so I have to refactor that logic/UI again (sigh, haha). In the end a lot of these UI screens need to be built out by hand, but it's important to reuse refactorable shared components so that this kind of change can be done without too much pain.

After a bunch more tweaking and iteration, I've ended up with something that I'm pretty happy with:

The layout alignment gives the menu a very tidy feel despite the fact that there are differing elements per row. Notice also that I've given the disabled options a bit of a "raised" look with a slight offset and a more distant drop shadow, so that activating an option visually looks like pressing in a button.

There's still a ton more work to be done here (all of these text labels need to be put into the localization database...), but that's all for now!
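For anyone curious what a "shared component" behind rows like these might look like, here is a minimal engine-free sketch of the data side only. Everything in it (`ChoiceSetting`, `Increment`, `DescriptionFor`, and so on) is a hypothetical name made up for illustration and is not Rhythm Quest's actual code. It assumes each row is a list of labelled choices that can be stepped in both directions or selected directly, with a per-choice description that can be read before applying anything.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical data model for one settings row; not Rhythm Quest's actual code.
public sealed class ChoiceSetting
{
    private readonly List<(string Label, string Description)> _choices;

    public string Name { get; }
    public int SelectedIndex { get; private set; }

    public ChoiceSetting(string name, IEnumerable<(string Label, string Description)> choices)
    {
        Name = name;
        _choices = new List<(string Label, string Description)>(choices);
        if (_choices.Count == 0)
            throw new ArgumentException("A setting needs at least one choice.", nameof(choices));
    }

    public int Count => _choices.Count;

    // Two-value settings can show both values side by side instead of arrows.
    public bool IsToggle => _choices.Count == 2;

    public string SelectedLabel => _choices[SelectedIndex].Label;

    // Step right/left by one. Clamps at the ends instead of wrapping, and
    // reports whether the selection actually changed so the UI can react.
    public bool Increment() => Select(SelectedIndex + 1);
    public bool Decrement() => Select(SelectedIndex - 1);

    // Direct selection, e.g. tapping one specific choice button.
    public bool Select(int index)
    {
        if (index < 0 || index >= _choices.Count || index == SelectedIndex)
            return false;
        SelectedIndex = index;
        return true;
    }

    // Text for the description box; reading it never changes the selection.
    public string DescriptionFor(int index) => _choices[index].Description;
}
```

The point of keeping this separate from the visuals is that the same object can sit behind arrow buttons, a row of tappable values, or a two-value toggle, so restyling the buttons doesn't mean touching the logic.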


DDRKirby(ISQ)


« Reply #11 on: August 06, 2021, 05:52:16 PM »

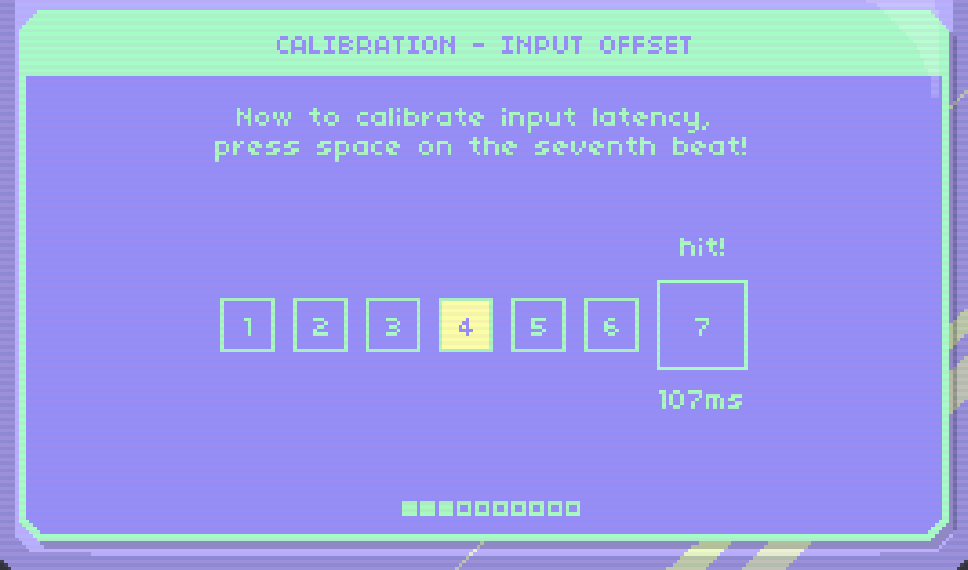

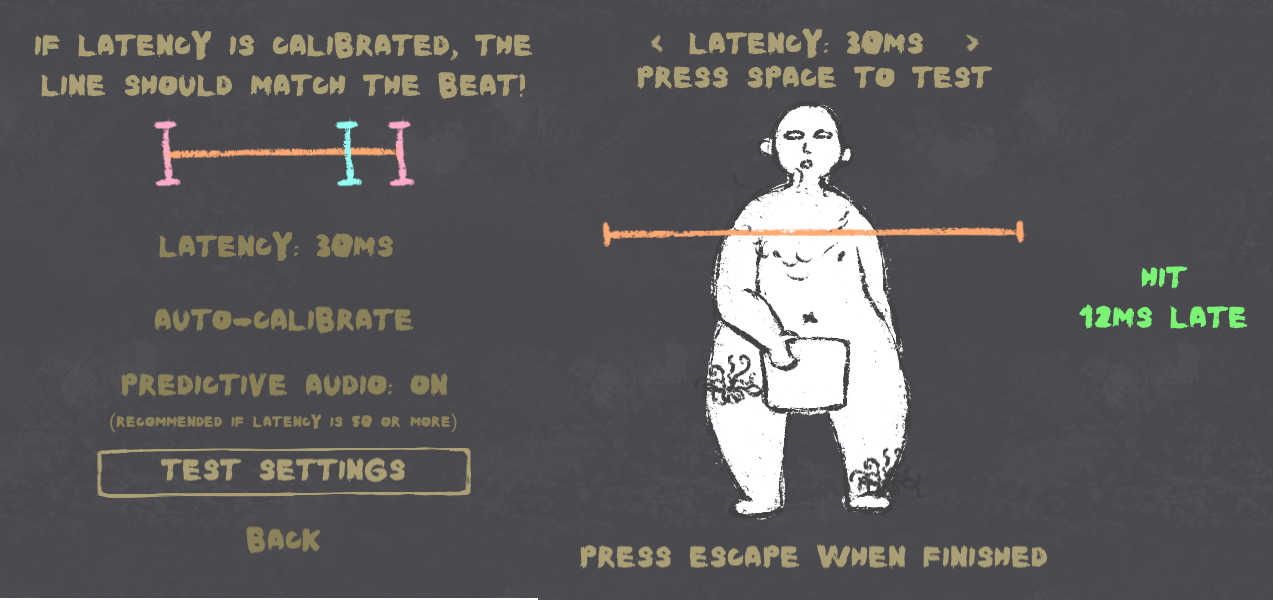

Latency Calibration

Along with fleshing out a bunch of the settings menus, this week I worked on one of my long-standing high-priority items: a reworked latency calibration screen.

As mentioned briefly in a previous devlog, audio output always has some amount of latency/delay on every device and it's important that we be able to measure this so that we can "queue up" sound/music in advance to account for this delay. Unfortunately, the exact latency amount is different from device to device, so there is no universal measurement that works (plus, different players may perceive latency differently due to psychoacoustics, etc.). Thus, we need to build some sort of latency calibration system for the player to be able to easily adjust this for themselves.

Types of Latency

Actually, there are three separate types of latency that are relevant to us for synchronizing music with gameplay: audio latency, visual latency, and input latency.

Audio latency is the delay between playing a sound and when the sound is actually able to be heard. This is caused by various audio buffering systems, mixing delays, hardware/engine limitations, Bluetooth headphone transmission time, the time it takes for sound to travel through the air, etc.

Visual latency is the delay between rendering an image and when that image is actually able to be seen. This is caused by double-buffering/rendering queue systems, monitor refresh/update characteristics, etc.

Input latency is the delay between a player performing an input and when that input is actually able to be handled by the game. This is caused by input debouncing / processing delays, frame-based input handling, wireless controller transmission times, other stuff in the engine, etc.

Trying to minimize these latencies usually involves adjusting various engine settings, and past that, going low-level and bypassing engine functionality entirely to interface directly with the low-level platform APIs. For example, bypassing Unity's audio mixing and input processing systems will result in much lower latencies...but of course you lose out on those features (unless you re-implement them yourself).

Note that usually, audio latency is the largest of the three latencies. (This is especially true on Android devices, which are notorious for having high amounts of audio latency.) Input and video latency are already optimized for most other games: if pressing a button does not result in immediate visual feedback, games feel very unresponsive. And these systems do not require the same sort of mixing and buffering systems that audio does. (One notable exception to this generalization would be when playing on a video projector or something like that.)

Measuring Latency

The standard way to measure and adjust for latency is through some sort of tap test (tap to the beat, or tap to the visual indicator), or by adjusting a video/audio offset.

Unfortunately, we can never measure a single type of latency by itself using a tap test. Having a user tap to an audio signal will give you the sum of audio latency + input latency. Similarly, having a user tap to a visual signal will give you the sum of video latency + input latency. Subtracting these from each other should in theory give you an audio / video offset value, since the input latency appears in both measurements and cancels out.

Depending on the exact needs of your game, there are a couple of different ways that you can set up calibration measurements.

Examples

The system of "audio tap test, video tap test" measurements described above definitely isn't the only way to set up a calibration system. Rhythm Doctor (a very nice one-button rhythm game!) splits calibration into two phases. In the first phase the user adjusts the video/audio offset so that both are synchronized:

In the second calibration phase, the audio/video sync has already been established, so all that's left is to determine input latency via a tap test:

It's worth noting that this "seventh beat tap test" mirrors the actual gameplay of the rest of the game, so it's very representative and should therefore hopefully be accurate.

I tried to do the same thing in Samurai Shaver -- I provide a "test scene" where you can simulate actual gameplay and adjust latency to get immediate feedback on whether your calibration is working out:

Assumptions and Restrictions for Rhythm Quest

Rhythm Quest isn't a normal "judgment-based" rhythm game like Dance Dance Revolution, or Guitar Hero, or Arcaea, or whatever. In particular, my ability to account for visual + input latency is minimal.

In traditional rhythm games, the judgment for a note can be delayed until a bit after the note has passed. When playing Guitar Hero on a calibrated setup with a lot of visual latency, for example, you hit each note as it "appears" to cross the guideline, but the note doesn't actually respond as being hit until it's travelled significantly past that point. That doesn't work as well for Rhythm Quest:

High amounts of input and visual latency can really throw off the game, to the point where normally a respawn would be triggered. Jumping is a good example of this -- in order to properly account for 100ms of input latency, by the time my code receives the jump button press, you ought to already be partway through your jump!

For playing sound effects, I can work around this kind of thing just fine. Rhythm Quest (by default) preschedules hit and jump sounds at the correct times, so even with significant audio latency, they will play at the appropriate timing. Note that this also means that even if you don't press anything and miss the hit, the correct sound will still play. While this is not 100% ideal, this is an effective compromise that needed to be made in order for sfx timing to be accurate. (This technique is used in other games as well.)

But for visuals this doesn't work as well. If I "preschedule visual effects" then I'd have to play an animation of you slashing an enemy, only to find out 100 milliseconds later that you never actually pressed a button. "Rewinding" that visual state would be incredibly jarring. Similarly, if I tried to account for jump input latency by starting all jumps 100ms in, the jumping animation would look very jerky on startup.

Given this, the solution I've chosen to go with is to assume that visual + input latency is relatively minimal and trust the player to compensate for it themselves. In fact, input delay is something present in pretty much all games to some extent, and even competitive fighting games frequently deal with 50-100ms of lag. Most people should hopefully adapt and adjust naturally to this sort of latency based on visual and auditory feedback.

The calibration in Rhythm Quest is thus primarily focused on determining the best value for audio vs video offset.

Putting It into Practice

Here's a video of the single-screen latency calibration system that I've built out so far:

It's a bit busy (actual UI still not final), but the basic concept works out pretty nicely. This screen is sort of a combination tap test plus user-driven audio/video offset calibration. The top section allows the user to tap in order to establish a rhythm, and the bottom section lets the user adjust the audio offset accordingly.

The end result is very immediate in that it should be very easy to tell by eye when calibration looks "locked in". (The UI will also highlight the appropriate button if it's obvious that an adjustment needs to be made.) The process is also relatively quick and doesn't require you to spend a full minute tapping to a sound 16 times or whatever, which is something I'm trying to avoid, as it causes friction for people who just want to jump into the game.

The design of this screen leverages the fact that the human eye is very good at picking up slight differences in timing between two flashing objects (i.e. "which of these two squares flashed first"). I actually only keep 4 tap samples (one for each square), which is very low for a tap test (usually you'd take 10 or so and throw out the min and max). However, I can get away with this because it is immediately obvious (by sight) if your taps were inconsistent in a notable way.

Note that it's very important that the button tap here does NOT play sounds, as those sounds would of course be scheduled with latency, and throw off the user.

The exact design of this screen will probably need to be tweaked (I probably need to hide the bottom row at first...), but so far I'm liking it much better than the standard "sit here and tap a button 10 times" design. I'm hoping that this will allow people to spend less time on this and jump into the game more quickly.
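To make the "Measuring Latency" arithmetic a bit more concrete, here's a rough sketch of the standard two-tap-test approach (NOT the single-screen design described above, and not Rhythm Quest's actual code). It assumes each sample is a tap error in milliseconds (tap time minus cue time); `LatencyEstimator`, `TrimmedMean`, and `AudioVideoOffsetMs` are hypothetical names invented for this example. It uses the usual "throw out the min and max" averaging, then subtracts the visual result from the audio result so that the shared input latency cancels.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration only.
public static class LatencyEstimator
{
    // Average the tap errors (tap time minus cue time, in milliseconds),
    // discarding the single smallest and single largest sample when there
    // are at least three samples to work with.
    public static double TrimmedMean(double[] tapErrorsMs)
    {
        if (tapErrorsMs == null || tapErrorsMs.Length == 0)
            throw new ArgumentException("Need at least one tap sample.", nameof(tapErrorsMs));
        if (tapErrorsMs.Length < 3)
            return tapErrorsMs.Average();

        double[] sorted = tapErrorsMs.OrderBy(x => x).ToArray();
        return sorted.Skip(1).Take(sorted.Length - 2).Average();
    }

    // Audio tap test  ~= audio latency + input latency
    // Visual tap test ~= video latency + input latency
    // Subtracting the two cancels the input latency term, leaving an
    // estimate of (audio latency - video latency).
    public static double AudioVideoOffsetMs(double[] audioTapErrorsMs, double[] visualTapErrorsMs)
    {
        return TrimmedMean(audioTapErrorsMs) - TrimmedMean(visualTapErrorsMs);
    }
}
```

With only a handful of samples the trimmed mean is still pretty noisy, which is part of why a design that lets the player confirm the result by eye is appealing.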


q1


« Reply #12 on: August 08, 2021, 08:29:42 AM »

wow, really love how technical and detailed you've written out this devlog. gotta appreciate posts like this. love the music, and the levels look like they feel really good to play. the way you incorporated combat into the rhythm runner genre made me think of Baby Driver, which made me imagine a hardcore combat rhythm game with a Hotline Miami ish aesthetic where you pull off insane moves to the beat. even though i don't think that's what you're going for haha. i like the addition of moving enemies too. good work and good luck!


DDRKirby(ISQ)


« Reply #13 on: August 23, 2021, 03:32:05 PM »

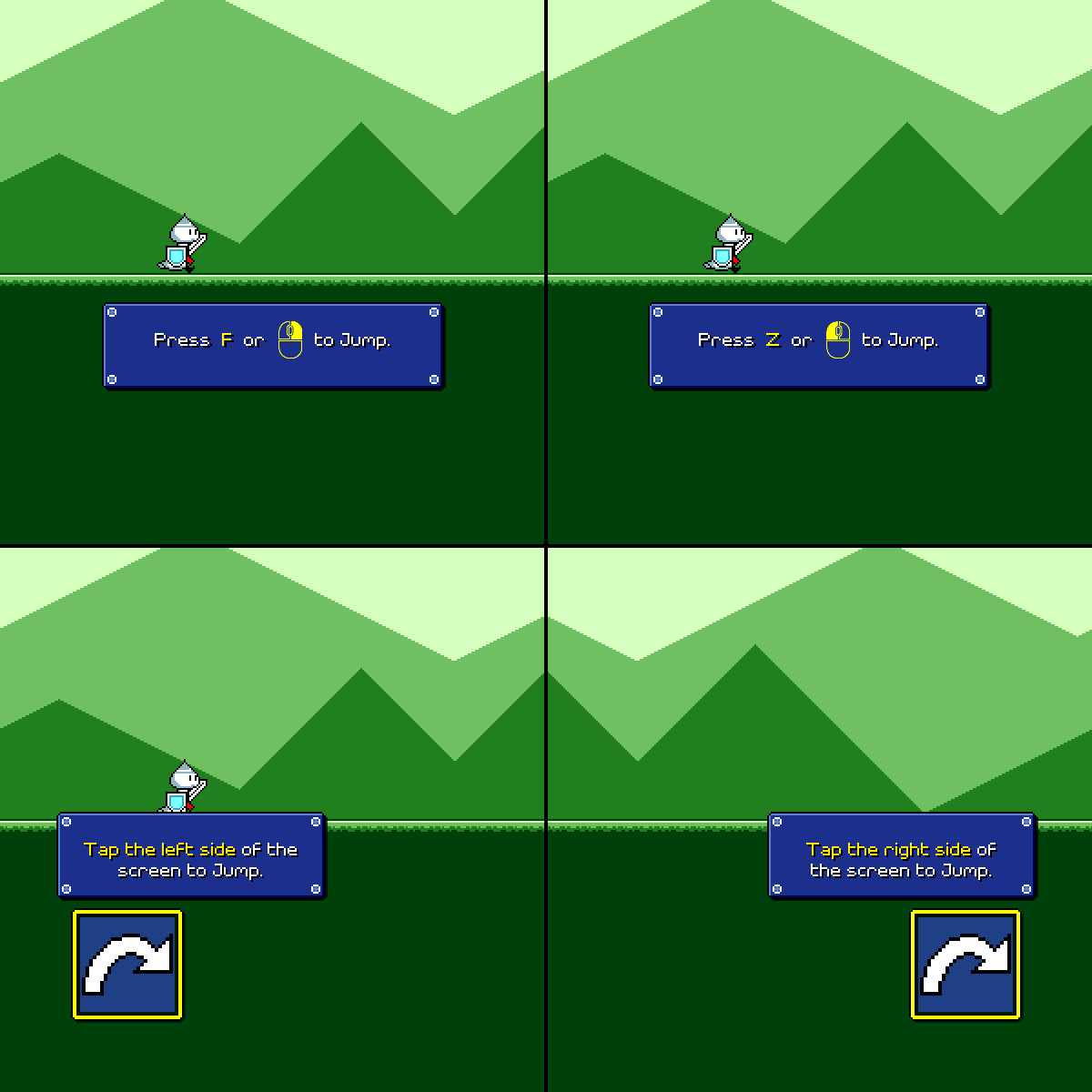

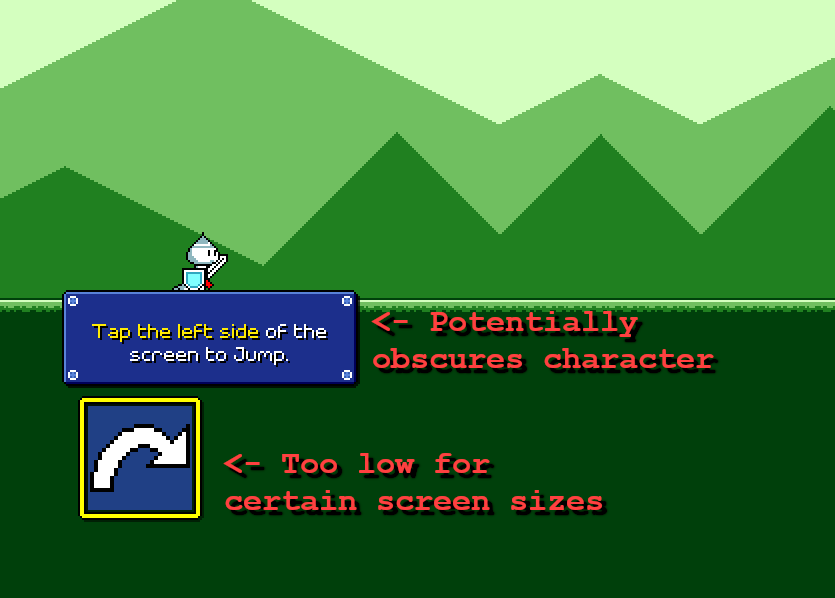

Odds and Ends

I don't have any single topic in particular to write about this time, so I'll just talk about a bunch of various minor things across the project that I ended up doing instead.

Controls tutorial

Okay, so maybe this one isn't exactly "minor", but I finally got around to adding a tutorial for the basic controls of the game. Note that for introducing new obstacles, I already provide a short interactive "tutorial" section as part of the beginning of that level:

I like how this avoids breaking the flow of the game. I'm able to piggyback a lot of understanding off of the fact that people already understand how platformers work, so it's very intuitive to jump over spike pits or to attack enemies.

However, I needed something slightly more hand-holdy in the very beginning of the game, particularly on desktop, to explain which buttons to press. Initially I was thinking of building out a separate scene for this, but I decided instead to keep in the spirit of reducing player friction and just bundled it together with the first level as a pre-level section:

Robustness

Tutorial logic can tend to be a pain in the butt to implement for many reasons -- one of them is trying to adapt the tutorial to changing keybinds and controller inputs. Consider that all of the following are different cases that need to be handled by the tutorial system depending on what input settings have been chosen by the player:

And this isn't even including gamepad support! (which I haven't added yet for the tutorial) This is one of those things that 90% of players won't even notice is implemented, and is only ever thought about when it goes wrong (so much of gamedev is like this...), but it's the right thing to do.

The messy way to handle this is by cramming all of the logic into one mega-script with a whole bunch of conditional logic, something like the following:

    if (controlScheme == "Keyboard") {
        // Show keyboard tutorial #1
    } else if (controlScheme == "Touch") {
        // Show touch tutorial #1
    }

    // Wait for player input...

    if (controlScheme == "Keyboard") {
        // Hide keyboard tutorial #1
    } else if (controlScheme == "Touch") {
        // Hide touch tutorial #1
    }

    ...

That can certainly work for some cases, but I wanted something that was a little easier to extend, so I created a uniform abstracted "Tutorial" step interface, where each tutorial "step" is an abstract object that can be told to show or hide. Then, I have platform-specific child objects that are only enabled for a specific input method, and key off of the parent's "show/hide" progress in order to render themselves. Something roughly like this:

    // In the main controlling script:
    Tutorial1.Show();

    // Wait for player input...

    Tutorial1.Hide();

    // In the Tutorial1 script (Canvas here is a CanvasGroup):
    public void Show() {
        // Fade our alpha value to 1 over one second.
        LeanTween.alphaCanvas(Canvas, 1.0f, 1.0f);
    }

    // In the child:
    void Update() {
        // Key our position off of the alpha value in some way?
        // Slides from _offset to zero as the parent fades in.
        _transform.anchoredPosition = Vector2.Lerp(_offset, Vector2.zero, _tutorial.Canvas.alpha);
    }
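The snippet above leans on Unity and LeanTween, so here is the same parent/child idea as an engine-free sketch that can be run and poked at on its own. All of the names (`TutorialStep`, `TutorialPrompt`, `InputMethod`, `Tick`) are hypothetical and made up for this example, and the linear fade stands in for whatever tween is actually used. The parent only tracks a 0-to-1 "shown" progress; each child is tied to one input method and derives its own position from the parent's progress.

```csharp
using System;

// Hypothetical, engine-free sketch; names are illustrative only.
public enum InputMethod { Keyboard, Touch }

// Parent "step": only tracks how visible it is (0 = hidden, 1 = shown).
public sealed class TutorialStep
{
    private float _target;

    public float Progress { get; private set; }

    public void Show() => _target = 1f;
    public void Hide() => _target = 0f;

    // Move Progress toward the target at a fixed fade rate (units per second).
    public void Tick(float deltaSeconds, float fadePerSecond = 1f)
    {
        float step = fadePerSecond * deltaSeconds;
        if (Progress < _target) Progress = Math.Min(_target, Progress + step);
        else if (Progress > _target) Progress = Math.Max(_target, Progress - step);
    }
}

// Child prompt: belongs to one input method and derives its look from the parent.
public sealed class TutorialPrompt
{
    private readonly TutorialStep _step;
    private readonly InputMethod _method;
    private readonly float _hiddenOffsetY;

    public TutorialPrompt(TutorialStep step, InputMethod method, float hiddenOffsetY)
    {
        _step = step;
        _method = method;
        _hiddenOffsetY = hiddenOffsetY;
    }

    // Only the prompt matching the active input method should be enabled.
    public bool IsEnabledFor(InputMethod active) => active == _method;

    // Slides from the hidden offset to 0 as the parent fades in.
    public float OffsetY => _hiddenOffsetY * (1f - _step.Progress);
}
```

The controlling script only ever calls `Show()` and `Hide()` on the step, so adding a new input method means adding another child rather than another branch in every `if` chain.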